Siri audio is always private and never used for ads: that’s Apple’s unwavering claim, and it’s a bold one in a world of increasingly data-hungry tech giants. This deep dive examines the claim by looking at Siri’s data collection practices, Apple’s privacy policies, and the technical safeguards in place to protect your voice data. We’ll dissect the revenue models, address user concerns, and peek into the future of voice assistant privacy.

From encryption methods to data deletion processes, we’ll uncover how Apple handles your Siri interactions. We’ll also compare Apple’s approach to competitors, analyze potential vulnerabilities, and discuss the ongoing evolution of privacy in the voice assistant landscape. Get ready to understand just how private your conversations with Siri really are (or aren’t).

Siri’s Data Handling Practices

Siri, Apple’s intelligent personal assistant, is always listening, or at least always ready to listen. But what happens to the data it collects? Understanding Apple’s approach to Siri’s data handling is crucial in today’s privacy-conscious world. This section looks at how Siri gathers your voice data, what Apple’s privacy policies say, and how the approach stacks up against competitors.

Siri’s Voice Data Collection Methods

Siri’s data collection begins the moment you activate it. Your device listens locally for the “Hey Siri” trigger phrase, and once it detects the phrase, the microphone starts capturing your request. The request is processed on the device where possible; otherwise the audio is transmitted to Apple’s servers for processing. The request includes not only the words you speak but also contextual data, such as your location (if enabled), the app you’re using, and even the time of day. This context allows Siri to provide more relevant and personalized responses. For example, asking Siri for nearby restaurants triggers the use of your location data to provide accurate results. This contextual information is vital to Siri’s functionality but also raises important privacy considerations.

Apple’s Privacy Policies Regarding Siri Audio Recordings

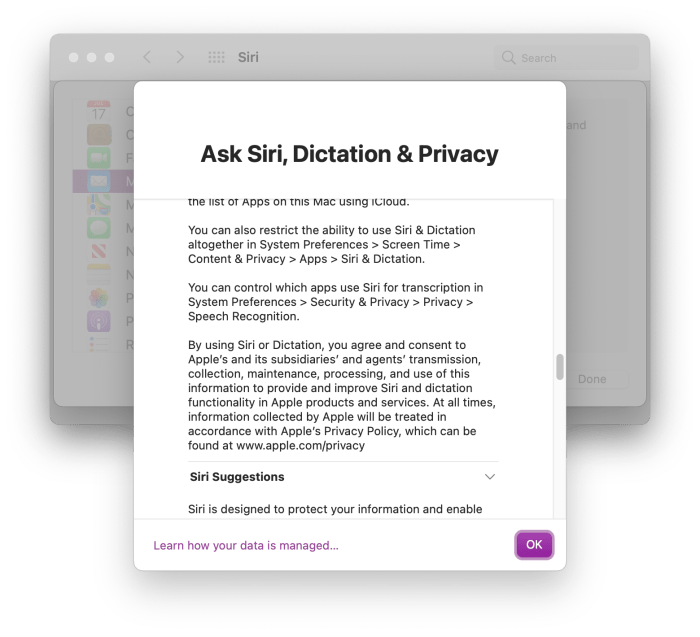

Apple’s official stance on Siri data privacy emphasizes user control and anonymization. Its privacy policy states that Siri recordings are used to improve Siri’s accuracy and functionality, and Apple asserts that these recordings are not used for targeted advertising. Apple also provides users with tools to access, review, and delete their Siri data, including the option to disable Siri entirely or to limit the data Siri collects. However, the exact methods of anonymization and the level of security applied remain somewhat opaque, fueling ongoing discussions about the balance between functionality and privacy.

Technical Processes for Data Anonymization and Protection

Apple employs various technical measures to protect user data. Differential privacy, a technique that adds noise to data sets to protect individual privacy while preserving aggregate trends, is frequently cited. Encryption, both in transit and at rest, is also a key element of Apple’s security strategy. The company claims to use advanced algorithms and security protocols to safeguard user data from unauthorized access. While the specific details of these processes are not publicly available, the emphasis is on minimizing the risk of data breaches and protecting user identities.
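To make the idea of "adding noise" concrete, here is a minimal sketch of one textbook form of differential privacy, the Laplace mechanism applied to a simple count. It is a generic illustration and not Apple's implementation, which is not described in this article. The function names, the `used_feature` data, and the choice of `epsilon` are all assumptions made for the example.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags: Sequence[bool], epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of True values (hypothetical helper).

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon. A smaller
    epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for flag in flags if flag)
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: whether each of 1,000 users used some feature.
used_feature = [True] * 300 + [False] * 700
rng = random.Random(42)  # seeded only to make the sketch repeatable
noisy_total = private_count(used_feature, epsilon=1.0, rng=rng)
```

Any single noisy result hides whether one particular person is in the data, while the average over many queries or many users still tracks the true trend. That is the trade-off the paragraph above describes.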

Comparison with Other Virtual Assistants

Compared to other virtual assistants like Google Assistant and Amazon Alexa, Apple’s approach to data privacy is generally considered more stringent. Google earns most of its revenue from advertising, and Amazon combines retail with a large advertising business, so both have stronger incentives to use user data for ad targeting. While they also offer privacy controls, the extent of data collection and use is generally broader. Apple’s focus on user privacy, though not perfect, represents a distinct departure from this model. The difference lies in the core business model: Apple prioritizes hardware sales and services, while its competitors depend more heavily on advertising and data-driven commerce.

Types of Data Collected by Siri and Their Intended Use

| Data Type | Intended Use | Example | Privacy Implications |
|---|---|---|---|
| Voice Recordings | Improve Siri’s accuracy and functionality | Your request to set a reminder | Potential for unintended disclosure of personal information |
| Location Data (if enabled) | Provide context-aware responses | Finding nearby restaurants | Tracking user movements |
| App Usage Data | Contextualize requests within specific apps | Setting a timer within a recipe app | Revealing user interests and habits |
| Device Information | Improve system performance and troubleshooting | Device model and operating system | Potential for identification of individual users |

Advertising and Siri’s Functionality

Siri’s functionality and Apple’s business model are intertwined, but not in the way you might initially think. While Siri’s vast potential for data collection might seem like a goldmine for targeted advertising, Apple’s public stance, and its revenue streams, suggest a different reality. Understanding this relationship requires examining both Apple’s financial strategies and the potential ethical implications of using user data for advertising.

Apple doesn’t monetize Siri directly through ad revenue. Instead, Siri is one component of the broader Apple ecosystem: it enhances the user experience and helps drive sales of Apple devices and services like Apple Music and iCloud. This indirect monetization is a significant departure from the advertising-driven models of many other voice assistants.

Apple’s Revenue Streams Related to Siri

Apple’s revenue from Siri is intricately linked to the overall success of its hardware and software ecosystem. The seamless integration of Siri into iPhones, iPads, Macs, and other Apple devices encourages users to remain within the Apple ecosystem, driving sales of hardware and subscriptions to services like Apple Music, iCloud storage, and Apple TV+. Siri’s improved functionality also enhances user satisfaction, indirectly boosting brand loyalty and repeat purchases. Apple doesn’t sell user data; its profit comes from selling products and services, not user information.

Potential Conflicts of Interest if User Data Were Used for Advertising

If Apple were to use Siri data for advertising, several significant conflicts of interest would arise. First, it would directly contradict Apple’s stated commitment to user privacy. Second, it would likely erode user trust, potentially driving users towards competing platforms that prioritize privacy. Third, it could lead to biased or manipulative advertising practices, negatively impacting user experience and potentially even causing harm. Imagine, for example, a user searching for information about a health condition, only to be bombarded with targeted ads for questionable treatments. This kind of scenario directly undermines the trust users place in Siri and Apple’s brand.

Hypothetical Scenario: Misuse of Siri Data for Targeted Advertising

Imagine a scenario where a user frequently uses Siri to search for gluten-free and vegan recipes. If this data were used for advertising, the user might be continuously targeted with ads for expensive, organic, vegan products, while more affordable options never appear. This example shows how even innocuous-looking data can be used to steer user choices and drive up spending, creating a conflict between Apple’s responsibility to its users and its potential profit from targeted advertising. Such targeting could also exclude certain user segments from access to information or services.

Arguments Supporting the Claim that Siri Audio is Never Used for Ads

Apple has consistently emphasized its commitment to user privacy, publicly stating that Siri audio is not used for advertising purposes. This commitment is reinforced by Apple’s emphasis on differential privacy and data anonymization techniques, which reduce the risk of individual user identification. Furthermore, Apple’s business model, which relies heavily on hardware and service sales, suggests that the company has little incentive to compromise user privacy for advertising revenue. Finally, there is no publicly confirmed instance of Siri data being used for advertising, which is consistent with the claim, although an absence of evidence cannot prove it outright.

User Perception and Trust

The relationship between users and their smart assistants is built on a foundation of trust. This trust, however, is fragile and hinges on the perceived security and privacy of personal data shared during interactions. With Siri, Apple faces the constant challenge of balancing user convenience with robust data protection measures, a tightrope walk that directly impacts user perception and, ultimately, adoption and loyalty.

User perceptions regarding the privacy of their Siri interactions are complex and multifaceted. While Apple actively promotes its commitment to privacy, a significant portion of users remain skeptical, particularly concerning the potential for data misuse, either directly by Apple or indirectly through third-party access. This skepticism is amplified by the ever-growing awareness of data breaches and privacy scandals affecting other tech giants.

Factors Influencing User Trust in Apple’s Privacy Claims

Several factors contribute to the level of trust users place in Apple’s privacy assertions regarding Siri. Transparency plays a crucial role; clear and easily understandable explanations of data collection practices, along with readily accessible information about how this data is used and protected, build confidence. Conversely, ambiguity and perceived secrecy can erode trust. Apple’s reputation for privacy also plays a significant part; its historical commitment to user data protection, in contrast to some competitors, contributes positively to user perception. However, past incidents, even minor ones, can negatively impact this hard-earned reputation. Finally, user experience directly influences trust; a seamless and intuitive interface that clearly communicates privacy settings empowers users and fosters a sense of control over their data.

Impact of Data Breaches or Privacy Controversies on User Confidence

Data breaches and privacy controversies, even those unrelated to Apple directly, can significantly impact user confidence in Siri and other similar services. The Cambridge Analytica scandal, for example, highlighted the vulnerability of personal data and fueled widespread anxieties about data misuse. This broader context of data privacy concerns makes users more critical and less trusting, even of companies with strong privacy policies like Apple. Even a perceived lack of responsiveness to minor privacy concerns can trigger a decline in user trust, highlighting the importance of proactive communication and immediate responses to any potential issues. A single high-profile breach affecting any tech company, regardless of size, can trigger a widespread reassessment of trust in the entire industry.

Enhancing User Trust in Siri’s Privacy Features

To further solidify user trust, Apple could implement several strategies. Proactive and transparent communication about data handling practices is paramount. This could involve simplified privacy dashboards that clearly visualize data collection and usage, along with options for granular control over data sharing. Regular audits and independent verification of security protocols could also enhance transparency and build confidence. Furthermore, actively engaging with user feedback and addressing privacy concerns promptly and openly demonstrates accountability and strengthens the user-company relationship. Apple could also expand its use of privacy-preserving technologies, such as differential privacy and federated learning, to minimize the risk of data compromise while still enabling the development of improved Siri features.

Strategies to Improve User Understanding of Siri’s Privacy Policies

Improving user understanding of Siri’s privacy policies requires a multi-pronged approach.

- Simplify the Language: Replace complex legal jargon with clear, concise language that’s easily understandable by the average user.

- Visual Aids: Utilize infographics and interactive diagrams to illustrate data flows and privacy settings.

- Personalized Explanations: Provide tailored explanations based on individual user settings and usage patterns.

- Interactive Tutorials: Offer step-by-step guides on how to manage privacy settings and understand data collection practices.

- Regular Updates: Provide timely updates to the privacy policy and accompanying educational materials to reflect changes in technology and data handling practices.

Technical Aspects of Privacy Protection

Apple’s commitment to user privacy extends beyond policy; it’s deeply ingrained in the technical architecture of Siri. This means robust encryption, sophisticated access controls, and a clear data deletion process. Let’s delve into the specifics of how Apple protects your Siri data.

Apple employs a multi-layered approach to securing Siri data, prioritizing user privacy at every stage. This involves encryption both during transmission and at rest, stringent access controls limiting data access to authorized personnel, and a straightforward process for users to request data deletion. These measures, while complex, are designed to be transparent and user-friendly, reflecting Apple’s ongoing dedication to data security.

Siri Audio Encryption

Siri audio recordings are encrypted using industry-standard encryption protocols, both during transmission between your device and Apple’s servers and while stored on those servers. This means that even if unauthorized access were to occur, the data would remain unintelligible without the appropriate decryption keys. The specific algorithms used are constantly reviewed and updated to maintain a high level of security against evolving threats. Apple doesn’t publicly disclose the precise details of its encryption methods for security reasons, but it consistently assures users that its encryption is among the most advanced available.

Mechanisms Preventing Unauthorized Access

Apple implements several layers of security to prevent unauthorized access to Siri data. This includes robust access controls, limiting access to authorized personnel on a need-to-know basis. These personnel undergo rigorous background checks and are subject to strict data handling protocols. Furthermore, data centers are secured physically and digitally, with multiple levels of redundancy and monitoring to prevent breaches. Regular security audits and penetration testing are conducted to identify and address potential vulnerabilities.

User Data Deletion Process

Users can delete their Siri data from the Siri settings on their device, using the Siri & Dictation History option. This initiates the secure removal of the associated data from Apple’s servers. While the exact timeframe for complete deletion may vary, Apple aims to process these requests efficiently and securely. The company provides clear instructions and updates users on the status of their deletion requests. This transparent process empowers users to maintain control over their personal information.

Comparison with Competitors

Compared to competitors like Google and Amazon, Apple’s approach to Siri data privacy emphasizes a more restrictive model. While all companies utilize encryption, Apple’s emphasis on differential privacy and on-device processing of data, where possible, distinguishes its approach. Competitors may rely more heavily on data aggregation for improving their services, potentially leading to greater data collection and analysis. Apple’s approach prioritizes user privacy even if it means potentially sacrificing some level of service improvement derived from extensive data analysis.

Apple’s Data Handling Process: From Recording to Deletion

- Recording: Siri audio is recorded on the user’s device.

- Encryption and Transmission: The audio is encrypted and transmitted to Apple’s servers.

- Storage: The encrypted audio is stored on secure servers.

- Processing: A small portion of the data, often anonymized and aggregated, may be used to improve Siri’s functionality.

- User Request: Users can initiate a data deletion request through the Siri settings on their device.

- Deletion: Apple processes the deletion request, securely removing the associated data from its servers.

Future Implications of Privacy in Voice Assistants

The world of voice assistants is booming, but the whispers of privacy concerns are growing louder. As these digital companions become more integrated into our lives, handling increasingly sensitive data, the future of voice assistant privacy hinges on navigating a complex landscape of technological advancements, evolving regulations, and shifting user expectations. This isn’t just about preventing rogue access to our personal information; it’s about building trust and ensuring responsible innovation.

The evolving landscape of voice assistant privacy and security presents both significant challenges and exciting opportunities. The sheer volume of data collected—from our daily conversations to our location and preferences—poses a considerable risk if not handled with utmost care. Conversely, the potential for enhanced personalization and accessibility through voice technology is enormous, but only if we can address the privacy implications effectively. This requires a proactive approach, combining strong technical safeguards with transparent data handling practices and robust regulatory frameworks.

Challenges and Opportunities in Voice Data Privacy

Balancing the benefits of personalized voice assistant experiences with the need for robust data privacy is a constant tightrope walk. Companies face the challenge of developing sophisticated anonymization techniques that protect user identity while still allowing for useful data analysis for improving service functionality. This also involves addressing potential biases embedded in algorithms trained on vast datasets, which could lead to discriminatory outcomes. On the opportunity side, advancements in federated learning and differential privacy offer promising avenues for training AI models on decentralized data, minimizing the risk of data breaches and enhancing user control. Think of it as a collaborative approach to improvement without compromising individual privacy. Imagine a scenario where many users contribute to a model’s improvement, but no single user’s data is directly exposed or identifiable.
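The "collaborative improvement" idea can be sketched as federated averaging: each device trains on its own data, and only the resulting model parameter is shared and averaged. The example below is a toy under stated assumptions, not a description of how any real assistant is trained. The one-parameter model `y ≈ w * x`, the sample data, and the names `local_update` and `federated_average` are all hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # one (x, y) observation held on a device


def local_update(w: float, data: List[Sample], lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one device's private data."""
    for _ in range(epochs):
        # Gradient of the mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(w: float, clients: List[List[Sample]], rounds: int = 20) -> float:
    """Average the locally trained weights, weighted by each device's sample count.

    Only the updated weight leaves a device; the raw samples never do.
    """
    for _ in range(rounds):
        updates = [(local_update(w, data), len(data)) for data in clients]
        total = sum(count for _, count in updates)
        w = sum(local_w * count for local_w, count in updates) / total
    return w


# Three hypothetical devices, each holding data that follows y = 3 * x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
shared_w = federated_average(0.0, clients)
```

In this sketch the shared weight moves toward the slope that all three devices' data agree on, even though the server only ever sees weights. Real systems typically add further protections, such as noise or secure aggregation, because model updates themselves can leak information.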

Future Technologies Enhancing Voice Assistant Privacy

Several emerging technologies hold the key to unlocking a more private future for voice assistants. Homomorphic encryption, for example, allows computations to be performed on encrypted data without decryption, ensuring data remains secure even during processing. This means that a company could analyze data for improvements without ever seeing the actual content of the voice recordings. Furthermore, advancements in federated learning allow AI models to be trained on data distributed across multiple devices without the need to centralize the data. This decentralized approach drastically reduces the risk of large-scale data breaches. Blockchain technology could also play a role in providing verifiable proof of data handling practices, enhancing transparency and accountability. Think of it like a tamper-proof ledger tracking all data interactions.
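To illustrate what "computing on encrypted data" means, here is a toy version of the Paillier cryptosystem, a well-known scheme that is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. This is a sketch chosen for illustration, not something the article attributes to Apple or any vendor. The primes are deliberately tiny and the randomness is not cryptographically secure, so the code offers no real protection.

```python
import math
import random

# Toy parameters: two small primes. Real deployments use primes of
# 1024 bits or more; these values offer no security at all.
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
G = N + 1  # a standard simple choice of generator
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAMBDA, -1, N)  # modular inverse, requires Python 3.8+


def encrypt(m: int, rng: random.Random) -> int:
    """Encrypt an integer 0 <= m < N under the public key (N, G)."""
    while True:
        r = rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover the plaintext using the private key (LAMBDA, MU)."""
    l_value = (pow(c, LAMBDA, N_SQ) - 1) // N
    return (l_value * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiply two ciphertexts, which adds their plaintexts modulo N."""
    return (c1 * c2) % N_SQ


rng = random.Random(7)  # seeded for repeatability; use a secure source in practice
encrypted_sum = add_encrypted(encrypt(12, rng), encrypt(30, rng))
total = decrypt(encrypted_sum)  # the party adding the values never saw 12 or 30
```

The party that calls `add_encrypted` needs only the ciphertexts and the public modulus, so it can aggregate values without being able to read them. Fully homomorphic schemes extend this idea to arbitrary computation, at a much higher computational cost.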

A Hypothetical System for Complete Voice Assistant Privacy

Imagine a system where voice data is processed entirely on the user’s device, using advanced on-device processing capabilities. The user’s voice commands are converted into encrypted queries and transmitted only as necessary, with the responses similarly encrypted and decrypted locally. This eliminates the need for cloud-based storage or processing of sensitive voice data. The system employs homomorphic encryption to allow for secure processing of data without decryption, ensuring privacy is maintained even during analysis for service improvements. Furthermore, the system incorporates a robust access control mechanism, granting the user complete control over data access and usage.

Visual Representation of a Completely Private Voice Assistant System

The illustration would depict a user’s device (smartphone or smart speaker) at the center, surrounded by a large protective shield that marks its secure environment. The user speaks a command, represented by a speech bubble with an encryption padlock symbol, and all voice processing happens locally inside that shield. Arrows show encrypted queries traveling only to the services a request needs (for example, weather updates or music playback), each represented by a secure box with a lock, and encrypted responses returning to the device. No central server or cloud storage is depicted; the absence of arrows leading to one signals that no voice data is stored or processed centrally.

Ending Remarks

Ultimately, while Apple’s commitment to Siri’s privacy is a significant selling point, perfect privacy remains a moving target. The constant evolution of technology and the ever-present potential for vulnerabilities mean ongoing vigilance is crucial. Understanding how Apple handles your data, however, empowers you to make informed decisions about your digital footprint and trust in voice assistants. The question isn’t just about whether Siri is private; it’s about the ongoing dialogue around data privacy in the age of voice technology.