Chatgpt down globally 2 – Kami Down Globally 2 – the headline screamed across the internet, leaving millions scrambling for answers. This wasn’t just a minor hiccup; a two-day global outage of such a powerful AI tool sent shockwaves through businesses, research institutions, and everyday users alike. The economic impact alone is staggering, with lost productivity and potential revenue losses reaching into the billions. But beyond the numbers, the incident raises critical questions about digital dependence, system resilience, and the importance of robust contingency planning in our increasingly AI-driven world.

This unprecedented event forced a reckoning: How reliant have we become on a single technology? The outage exposed vulnerabilities in our digital infrastructure and highlighted the urgent need for better backup systems, more transparent communication strategies, and a more resilient approach to building and maintaining critical online services. The aftermath reveals a crucial lesson: we can’t afford to be caught off guard again.

Global Outage Impact: Chatgpt Down Globally 2

A two-day global outage of a widely used service like Kami would send ripples across the globe, impacting individuals and businesses in profound ways. The scale of disruption would be unprecedented, highlighting our increasing reliance on digital infrastructure and the interconnected nature of the modern world. The consequences, both immediate and long-term, are significant and far-reaching.

The widespread impact on various user groups would be immediate and substantial. Businesses relying on Kami for customer service, content creation, or data analysis would experience significant productivity losses. Students using it for research or writing assignments would face delays and potential academic setbacks. Researchers utilizing the platform for data processing and analysis would see their projects hampered. The cumulative effect of these individual disruptions would translate into a massive global economic impact.

Economic Consequences of a Two-Day Global Outage

A two-day global outage of Kami would result in substantial economic losses. Lost productivity across various sectors, from education and research to business and marketing, would be immense. For example, consider the countless businesses that use Kami for automated customer service; two days of downtime would mean a backlog of unanswered queries, potentially leading to frustrated customers and lost sales. The financial modeling sector, which frequently leverages AI for complex calculations and predictions, would also suffer significant delays and potential inaccuracies in their analyses. Estimating the precise financial impact is challenging, but considering the widespread use of Kami, it’s safe to assume the losses would run into billions of dollars globally, mirroring the scale of disruption caused by major internet outages in the past. The revenue losses for OpenAI itself would also be substantial.

Impact on User Trust and Brand Reputation

A significant service interruption like a two-day global outage would severely damage user trust and brand reputation. The initial reaction would likely be frustration and anger, amplified by the widespread nature of the disruption. Users might question the platform’s reliability and consider switching to alternative services. This loss of trust could have long-term consequences, affecting future user adoption and impacting OpenAI’s market share. The incident could also lead to increased regulatory scrutiny and potential legal challenges from users or businesses who experienced significant losses due to the outage. The recovery process would require significant investment in infrastructure improvements and transparent communication with users to rebuild confidence.

Short-Term and Long-Term Effects of a Global Outage

| Impact Area | Short-Term Effect | Long-Term Effect | Mitigation Strategy |

|---|---|---|---|

| Business Productivity | Significant loss of productivity, delays in projects, missed deadlines. | Reduced efficiency, potential loss of market share, increased operational costs. | Invest in robust backup systems, diversify AI tools, implement disaster recovery plans. |

| User Trust & Brand Reputation | Decreased user trust, negative media coverage, potential loss of customers. | Long-term damage to brand reputation, difficulty attracting new users, increased competition. | Transparent communication, proactive problem-solving, swift restoration of service, compensation for affected users. |

| Economic Impact | Billions of dollars in lost productivity and revenue across various sectors. | Slowed economic growth, potential for long-term disruption in certain industries. | Investment in resilient infrastructure, improved cybersecurity measures, government support for affected businesses. |

| Research & Development | Significant delays in research projects, hampered data analysis, potential loss of valuable data. | Slowed pace of innovation, potential for missed breakthroughs, increased research costs. | Development of robust data backup and recovery systems, diversification of research tools. |

Root Cause Investigation

A two-day global outage of a massive system like Kami isn’t just a hiccup; it’s a seismic event demanding a thorough investigation. Pinpointing the root cause requires a systematic approach, combining technical expertise with a deep understanding of the system’s architecture and dependencies. The longer the outage, the more severe the impact, highlighting the critical need for robust preventative measures.

The sheer complexity of a large-language model like Kami makes identifying the culprit challenging. Multiple interconnected systems – from the servers themselves to the network infrastructure, databases, and even the codebase – could be implicated. A cascading failure, where one component’s malfunction triggers a chain reaction across the entire system, is a plausible scenario. Imagine a single faulty server triggering a surge, overloading the network, and ultimately leading to a complete shutdown. The investigation needs to unravel this potential chain reaction to pinpoint the initial trigger.

Possible Technical Reasons for System Failure

Several technical factors could contribute to a large-scale, two-day outage. These include hardware failures (like widespread server crashes or network equipment malfunctions), software bugs (potentially in a critical component of the system), misconfigurations (incorrect settings leading to instability or unavailability), or even external factors like a Denial-of-Service (DoS) attack. The scale of the outage suggests a significant problem, potentially affecting core infrastructure or a crucial piece of software. For instance, a poorly written update to a critical database management system could cascade into widespread unavailability. A massive DDoS attack, overwhelming the system’s capacity to handle requests, is another plausible, albeit less likely, explanation given the duration.

Hypothetical Troubleshooting Process, Chatgpt down globally 2

A methodical approach is crucial. The process would likely begin with monitoring tools, analyzing logs for error messages and performance degradation indicators. This initial phase would identify affected components and pinpoint the time of the initial failure. Next, engineers would isolate the problem area, perhaps by systematically restarting components or reverting to previous software versions. This process of elimination would narrow down the potential causes. Detailed analysis of system logs, network traffic, and database activity would then provide insights into the precise nature of the failure. In parallel, stress tests on isolated components could reveal vulnerabilities or weaknesses. The ultimate goal is to recreate the failure in a controlled environment to understand the precise sequence of events leading to the outage. The entire process requires close collaboration between different teams, including infrastructure, software development, and security.

Preventative Measures to Reduce Future Outages

Preventing future outages requires a multi-pronged approach. This includes implementing robust redundancy (multiple backups of critical systems and data), rigorous testing (including stress tests and penetration testing), automated failover mechanisms (allowing the system to automatically switch to backup systems in case of failure), and a well-defined incident response plan. Regular software updates and security patches are essential to address known vulnerabilities. Furthermore, investing in advanced monitoring tools that provide real-time insights into system health can allow for proactive intervention before a minor issue escalates into a major outage. Finally, regular disaster recovery drills can help teams practice their response procedures and identify potential weaknesses in the system. Investing in these measures, while costly upfront, is significantly cheaper than the cost of a two-day global outage in terms of reputation and lost revenue.

User Response and Communication

A global service outage isn’t just a technical problem; it’s a massive communication challenge. How you handle user interaction during downtime directly impacts brand reputation and user trust. A swift, transparent, and empathetic response can turn a crisis into an opportunity to strengthen those bonds. Conversely, silence or misleading information can quickly erode confidence and lead to lasting damage.

Effective communication during a global outage requires a multi-pronged approach, leveraging various platforms to reach the widest possible audience and provide consistent messaging. Failing to do so can lead to confusion, frustration, and a flood of negative publicity. The key is proactive, honest communication that keeps users informed and manages their expectations.

Communication Strategy for Global Service Interruption

A robust communication strategy needs to be pre-planned and readily deployable. This isn’t something you want to be figuring out while your service is down. Consider a tiered approach, with immediate messaging followed by more detailed updates as information becomes available. This tiered system allows you to address immediate concerns while also preparing for longer-term communication needs. This involves using multiple channels to reach diverse user segments.

- Website Updates: A dedicated landing page should immediately inform users of the outage, providing concise information about the problem and estimated restoration time. This page should be easily accessible from all entry points to the website.

- Social Media: Platforms like Twitter, Facebook, and Instagram should be used for real-time updates, addressing user concerns and providing quick answers to frequently asked questions. Consistent messaging across all social media channels is crucial to avoid conflicting information.

- Email Notifications: For registered users, email notifications should provide more detailed updates and offer alternative solutions, if applicable. This personal touch helps maintain a sense of connection and demonstrates proactive engagement.

- In-App Notifications (if applicable): If the service is an app, push notifications can provide immediate updates to users, keeping them informed even when they aren’t actively checking the website or social media.

- Press Releases: For significant outages, consider issuing press releases to major media outlets to ensure widespread awareness and manage public perception. This demonstrates transparency and accountability.

Managing User Expectations and Alleviating Concerns

Transparency is paramount. Don’t sugarcoat the situation. Acknowledge the problem directly, provide an honest assessment of the situation, and set realistic expectations for restoration. Regular updates, even if they don’t contain significant new information, are crucial to keep users informed and prevent speculation. Regular updates should emphasize empathy and understanding, acknowledging the inconvenience the outage causes. Consider including a personal touch from leadership, showing the company is invested in resolving the issue.

For example, during a prolonged outage, instead of simply saying “We’re working on it,” provide specifics like, “Our engineering team is currently focused on resolving a critical database issue that is impacting service availability. We anticipate full restoration within the next 4-6 hours, and we will provide an update at 2 PM PST.” This level of detail shows that progress is being made and sets clear expectations. Furthermore, proactive communication, such as offering alternative services or temporary workarounds, can help alleviate user frustration and demonstrate commitment to user satisfaction.

Sample FAQ Document for Major Service Disruption

A well-organized FAQ document can significantly reduce the volume of individual inquiries and direct users to helpful information quickly. The FAQ should be easily accessible on the website and social media platforms.

- What is happening? A global service interruption is currently affecting [service name]. Our engineering team is working diligently to restore full functionality as quickly as possible.

- What caused the outage? [Clearly and concisely explain the root cause, without technical jargon. If unknown, state that the investigation is ongoing.]

- When will service be restored? We are currently estimating a restoration time of [time/date]. We will provide updates every [frequency] on our website and social media channels.

- What can I do while the service is down? [Suggest alternative solutions or resources if applicable. For example, “You can access our help center for troubleshooting tips.”]

- Will I lose any data? [Provide a definitive answer, if possible. If uncertain, state that data loss is being investigated.]

- How will I be notified when service is restored? We will announce restoration via our website, social media channels, and email notifications (for registered users).

- Who can I contact for support? [Provide contact information, if appropriate. Consider directing users to a dedicated support channel to manage the volume of inquiries.]

Alternative Solutions and Contingency Plans

Source: cnbctv18.com

A global outage of a service like Kami highlights the critical need for robust backup systems and well-defined contingency plans. Preventing future disruptions requires a multi-faceted approach, encompassing both technological solutions and strategic planning to ensure business continuity and minimize user impact. Investing in these areas isn’t just about preventing downtime; it’s about maintaining trust and demonstrating a commitment to reliable service.

The core strategy revolves around redundancy and failover mechanisms. Simply put, having multiple systems in place that can seamlessly take over if one fails is crucial. This extends beyond just having backup servers; it includes redundant network infrastructure, data centers in geographically diverse locations, and even alternative service delivery methods.

Redundant Systems and Failover Mechanisms

Implementing redundant systems involves creating multiple independent copies of critical components. This means having duplicate servers, databases, and network equipment, all capable of handling the full workload. If one system fails, another automatically takes over, ensuring uninterrupted service. A key aspect is the failover mechanism – the automated process that switches traffic from the failed system to the backup. This needs to be fast, reliable, and thoroughly tested to minimize downtime during a real-world event. For example, a company might have its primary data center in New York and a fully functional backup in London. If the New York center experiences a power outage, the system automatically redirects traffic to London, ensuring minimal disruption to users.

Geographic Distribution and Disaster Recovery

Distributing infrastructure across multiple geographic locations is a powerful strategy to mitigate the impact of localized disasters. This minimizes the risk of a single event, such as an earthquake or hurricane, taking down the entire system. Amazon Web Services (AWS), for instance, utilizes a vast network of data centers around the globe, allowing for regional failover and enhanced resilience. The key here is to strategically select locations that are far enough apart to avoid being affected by the same disaster but still offer low latency for users.

Contingency Plan Activation Flowchart

The following describes a flowchart illustrating the steps involved in activating a contingency plan during a large-scale failure. Imagine a scenario where a major database failure is detected:

1. Failure Detection: Automated monitoring systems detect a critical failure in the primary database.

2. Alert Trigger: An immediate alert is sent to the operations team via SMS, email, and internal communication systems.

3. Initial Assessment: The team assesses the severity and scope of the failure, confirming it’s not a temporary glitch.

4. Failover Initiation: The team initiates the automated failover to the redundant database system.

5. Service Restoration Monitoring: The team closely monitors the backup system to ensure service restoration and stability.

6. User Communication: Updates are sent to users via website, social media, and email, explaining the situation and estimated restoration time.

7. Root Cause Analysis: After service is restored, a thorough investigation is launched to determine the root cause of the failure and implement preventative measures.

8. System Restoration: The primary database is restored and thoroughly tested before being brought back online.

Future Improvements and Resilience

Source: macrumors.com

The recent global outage highlighted critical vulnerabilities in our system architecture. While immediate responses focused on mitigation and restoration, a long-term strategy focused on preventing future incidents is paramount. This requires a multi-pronged approach encompassing technological upgrades, robust testing protocols, and a renewed focus on system resilience. Ignoring these lessons learned would be a recipe for repeating the same costly mistakes.

Building a more resilient system isn’t just about adding more servers; it’s about a fundamental shift in how we design, deploy, and maintain our infrastructure. This involves a holistic approach that considers every aspect of the system, from individual components to the overall architecture. The goal is to create a system that can gracefully handle unexpected events, minimizing disruption to users and maintaining a high level of service availability.

System Architecture Enhancements

Improving the system’s resilience requires a comprehensive review and upgrade of its architecture. This involves moving beyond simple redundancy and implementing more sophisticated strategies like active-active clustering, geographically distributed data centers with automatic failover, and advanced load balancing algorithms. For instance, instead of relying on a single point of failure for critical services, we can distribute these services across multiple data centers, each capable of handling the full load. If one data center experiences an outage, the system seamlessly redirects traffic to the others, ensuring uninterrupted service. This requires significant investment in infrastructure and network connectivity, but the cost is far outweighed by the potential losses associated with another global outage.

Technological Upgrades

Several specific technological upgrades can significantly improve service reliability and stability. These include implementing advanced monitoring and alerting systems that provide real-time insights into system performance and potential issues. This allows for proactive intervention, preventing minor problems from escalating into major outages. Furthermore, incorporating self-healing capabilities into the system allows for automatic recovery from certain types of failures, minimizing downtime. For example, if a server crashes, the system can automatically restart it or redirect traffic to other available servers without human intervention. Investing in cutting-edge technologies like AI-powered anomaly detection can also help identify and address potential problems before they impact users.

Comprehensive Maintenance Plan

Regular system testing and maintenance are crucial for preventing major disruptions. A comprehensive maintenance plan should include the following key aspects:

- Regular Backups: Frequent, automated backups of all critical data, ensuring rapid recovery in case of data loss.

- Automated Monitoring: Real-time monitoring of system performance, resource utilization, and potential issues, with automated alerts for critical events.

- Stress Testing: Regular stress tests to simulate high-load scenarios and identify bottlenecks or vulnerabilities.

- Security Audits: Regular security audits to identify and address potential security vulnerabilities that could lead to outages or data breaches.

- Software Updates: Timely implementation of software updates and patches to address bugs and security vulnerabilities.

- Disaster Recovery Drills: Regular disaster recovery drills to test the effectiveness of the disaster recovery plan and identify areas for improvement.

A proactive approach to maintenance, encompassing these key areas, will significantly reduce the risk of future outages and enhance the overall resilience of the system. The investment in these preventative measures is a small price to pay compared to the potential costs of another widespread service disruption.

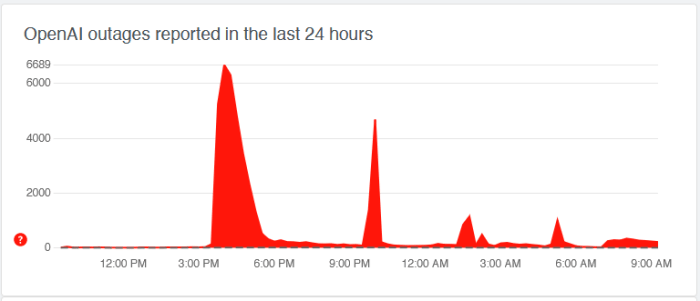

Visual Representation of the Outage

Source: co.za

A global outage of a service like Kami requires a clear and concise visual representation to effectively communicate the extent and impact of the disruption. This is crucial for both internal troubleshooting and external user communication. The visual tools employed should be intuitive and readily understandable, even for those unfamiliar with technical details.

Visualizing a global outage involves several key components, primarily focusing on a geographical representation of affected areas and a timeline of the event.

Global Outage Map

A global outage map would ideally use a world map as its base. Affected regions would be highlighted using a color-coding system to represent the severity of the disruption. For instance, a light red could indicate minor service degradation, while a deep red would signify a complete outage. The intensity of the color could be tied to the percentage of users experiencing issues within a specific region. Data points could be aggregated at various geographical levels—countries, states, or even cities—depending on the available data granularity. The map should be interactive, allowing users to zoom in and out and obtain more detailed information about specific regions. A legend clearly explaining the color-coding scheme is essential for proper interpretation.

Outage Timeline Infographic

An infographic detailing the outage timeline would utilize a horizontal timeline to visually represent the sequence of events. Key milestones, such as the initial detection of the outage, peak impact, commencement of mitigation efforts, and full service restoration, would be marked with distinct icons and labels. A bar chart could visually compare the duration of the outage in different regions. The infographic could also include icons representing key contributing factors or significant events, such as system failures or network disruptions. For example, a broken server icon could represent a server crash, while a lightning bolt could represent a power outage. The use of clear and concise labels, along with a visually appealing design, would ensure easy understanding and rapid comprehension of the event’s progression. A simple, clean design is crucial to avoid overwhelming the viewer with unnecessary detail. The use of a consistent color scheme throughout the infographic enhances visual coherence.

Last Recap

The two-day global outage served as a stark reminder of our interconnectedness and our vulnerability to large-scale digital disruptions. While the immediate impact was felt across numerous sectors, the long-term consequences could be far-reaching. The incident underscores the need for proactive measures to prevent future occurrences, including investing in robust infrastructure, developing comprehensive contingency plans, and fostering greater transparency in communicating with users during service disruptions. Ultimately, the experience provides a valuable case study for how to build a more resilient digital future, one that’s better prepared for the inevitable challenges that lie ahead.