The ChatGPT crawler vulnerability is a serious concern, exposing the hidden dangers lurking within the seemingly innocuous act of web crawling. Imagine a digital spider, tirelessly scouring the internet, inadvertently stumbling upon sensitive information or overloading systems. This isn’t science fiction; it’s a real threat, demanding a closer look at the vulnerabilities in how crawlers interact with large language models and the potential consequences.

This vulnerability stems from the intersection of powerful AI models and the ever-present web crawlers. The way these crawlers access and process data from these models creates a number of potential security risks, from data breaches and denial-of-service attacks to ethical concerns about data usage. Understanding the architecture of these crawlers, how they interact with APIs, and the potential points of failure is crucial in mitigating these risks.

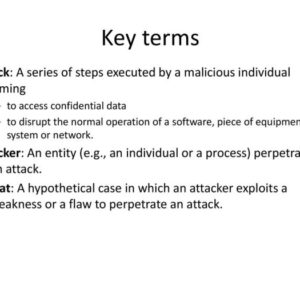

Understanding the Nature of Crawlers and their Interaction with Large Language Models

Source: tech-recipes.com

Web crawlers, the tireless digital spiders of the internet, are constantly scouring the web, collecting data that fuels everything from search engines to market research. Their interaction with Large Language Models (LLMs), however, presents a fascinating and increasingly complex relationship, rife with both opportunity and vulnerability. Understanding this interplay is crucial in navigating the ever-evolving landscape of AI and data collection.

The typical architecture of a web crawler involves several key components working in concert. First, a seed URL is provided, acting as the starting point for the crawl. From there, the crawler follows links found on the initial page, adding new URLs to its queue. A scheduler manages this queue, prioritizing URLs based on various factors, like relevance or freshness. The crawler then fetches the content of each URL, often using a web client library to handle HTTP requests. Finally, a parser extracts relevant data from the fetched content, storing it in a database or other storage mechanism. This process continues iteratively, expanding outward from the initial seed URL.
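The components described above can be sketched in a few lines. This is a minimal illustration, not a production crawler: the `crawl` function, the `LinkExtractor` class, and the in-memory `SITE` dictionary are all hypothetical names introduced here, the scheduler is a plain first-in-first-out queue, and the fetcher is passed in as a function so that no real HTTP requests are made.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Parser stage: collects href values from anchor tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_url, fetch, max_pages=100):
    """Breadth-first crawl starting at seed_url.

    `fetch` maps a URL to HTML text (or None on failure), so the HTTP
    client can be swapped out. Returns a dict of url -> html.
    """
    queue = deque([seed_url])  # frontier, scheduled first-in-first-out
    seen = {seed_url}          # avoids queueing the same URL twice
    store = {}                 # stand-in for a database

    while queue and len(store) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        store[url] = html

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return store


# Usage with an in-memory "site" instead of real HTTP requests.
SITE = {
    "http://example.test/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.test/a": '<a href="/">home</a>',
    "http://example.test/b": "no links here",
}
pages = crawl("http://example.test/", SITE.get)
```

A real scheduler would typically replace the FIFO queue with a priority queue keyed on relevance or freshness, and would also honor `robots.txt` and per-host politeness delays.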

Crawler Interaction with LLM APIs

A crawler might interact with an LLM API by submitting text queries or prompts to the API and receiving structured or unstructured text responses in return. Imagine a crawler designed to gather information on a specific topic. Instead of simply extracting text from websites, it could formulate queries based on the content it finds and use an LLM API to summarize, translate, or even generate new content based on the information it has gathered. For instance, a crawler could extract information about historical events from various sources, then use an LLM to generate a concise and coherent summary, or even a fictionalized narrative based on those events. This interaction opens up new possibilities for data processing and analysis.

Potential Points of Failure and Vulnerability

The interaction between crawlers and LLM APIs is not without its challenges. One significant constraint is rate limiting. LLM APIs often impose usage limits to prevent abuse or overload. A poorly designed crawler could easily exceed these limits, resulting in its requests being blocked or throttled. Furthermore, the accuracy and reliability of the LLM responses themselves pose a risk. LLMs are prone to hallucinations, that is, generating incorrect or nonsensical information. A crawler relying heavily on LLM outputs could inadvertently propagate these errors, leading to inaccurate or misleading data. Finally, security vulnerabilities in the API itself or in the crawler’s implementation could expose sensitive data or allow malicious actors to manipulate the system. Proper authentication, authorization, and input validation are crucial to mitigating these risks.

Comparison of Different Crawler Types and Their Impact

Different types of web crawlers exist, each with its own strengths and weaknesses, and each impacting LLMs differently. Focused crawlers, designed to target specific websites or types of content, are more efficient but might miss broader contextual information. General-purpose crawlers, on the other hand, cast a wider net, potentially overwhelming the LLM API with irrelevant data. Furthermore, the sophistication of the crawler can greatly affect the quality of data presented to the LLM. A sophisticated crawler might use natural language processing techniques to pre-process the data, improving the quality of the LLM’s output. Conversely, a simplistic crawler might flood the LLM with raw, unprocessed data, leading to less effective results. The choice of crawler type directly impacts the efficiency and accuracy of the LLM-powered data processing pipeline.

Identifying Potential Vulnerabilities in Data Retrieval

Scraping data from a large language model (LLM) like ChatGPT isn’t as simple as it sounds. It’s a delicate dance between accessing valuable information and potentially triggering mechanisms designed to protect the system. Understanding the vulnerabilities inherent in this process is crucial for both developers and users alike. Ignoring these risks can lead to anything from temporary service disruptions to complete system crashes.

The seemingly straightforward act of retrieving data from an LLM API opens a Pandora’s Box of potential problems. Rate limiting, data overload, and denial-of-service (DoS) attacks are just a few of the challenges that need to be carefully addressed. The sheer volume of data involved, combined with the complex nature of LLMs, creates a unique set of security concerns that require a multi-faceted approach to mitigation.

Rate Limiting and API Abuse

LLM APIs often employ rate limiting to prevent abuse and ensure fair access for all users. Exceeding these limits, whether intentionally or unintentionally, can result in temporary or permanent bans. Intentional abuse, such as creating botnets to flood the API with requests, is a serious concern. This can cripple the service for legitimate users. Unintentional abuse can also occur due to poorly designed crawlers that don’t respect rate limits or handle errors gracefully. For example, a crawler might send repeated requests without implementing proper backoff mechanisms after encountering errors, leading to exceeding the API’s rate limits.
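As a rough illustration of the backoff mechanism mentioned above, the sketch below retries a call with exponentially growing, randomized delays. The `RateLimited` exception and the `call_with_backoff` helper are hypothetical names introduced for this example; a real client would map the API's own "too many requests" response (commonly HTTP 429) onto such an exception.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error raised when the API reports a rate-limit hit."""


def call_with_backoff(request, max_retries=5, base_delay=1.0,
                      max_delay=60.0, sleep=time.sleep):
    """Call request(); on RateLimited, wait and retry with growing delays."""
    for attempt in range(max_retries + 1):
        try:
            return request()
        except RateLimited:
            if attempt == max_retries:
                raise  # give up after the final attempt
            # Exponential ceiling (1s, 2s, 4s, ...) capped at max_delay,
            # with a random delay below it so clients do not retry in sync.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
```

The `sleep` parameter exists only so the waiting can be replaced in tests. If the API returns a `Retry-After` header, honoring that value is usually preferable to a computed delay.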

Risks Associated with Handling Large Data Volumes

Retrieving massive datasets from an LLM API presents significant challenges. Efficient storage and processing of this data are essential. Failure to properly handle these large volumes can lead to system instability, data corruption, and even crashes. Imagine a crawler attempting to download the entire corpus of an LLM’s knowledge base. The sheer size of this data could overwhelm even the most robust systems. Effective strategies for data compression, efficient database management, and parallel processing are crucial to mitigate these risks. For instance, using a distributed database system can help manage the data more effectively, preventing single points of failure and improving scalability.

Detecting and Mitigating Denial-of-Service Attacks

Denial-of-service (DoS) attacks aim to make an online service unavailable to its intended users. In the context of an LLM API, a DoS attack could involve a flood of requests designed to overwhelm the system’s capacity. Detecting these attacks requires careful monitoring of API usage patterns. Identifying sudden spikes in requests from a single IP address or a range of IP addresses could indicate a DoS attack. Mitigation strategies include implementing rate limiting, using firewalls to block malicious traffic, and employing distributed denial-of-service (DDoS) mitigation services.

API Usage Monitoring and Anomalous Behavior Identification

Proactive monitoring of API usage is critical for identifying potential vulnerabilities before they cause significant problems. A robust monitoring system should track key metrics such as the number of requests per second, the average response time, and the error rate. Anomalous behavior, such as a sudden surge in requests from an unusual source or a significant increase in error rates, should trigger alerts. Machine learning algorithms can be employed to analyze historical data and identify patterns indicative of malicious activity. This allows for early detection and response to potential threats, preventing widespread disruption. For example, setting up alerts that trigger when the number of requests from a single IP address exceeds a predefined threshold, or when the error rate surpasses a certain percentage, can help identify potential problems early on.
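The threshold alerts described above might look something like the following sketch. The `UsageMonitor` class and its default thresholds are assumptions chosen for illustration; it expects events to be recorded in timestamp order and keeps everything in memory, which a real deployment would replace with a metrics system.

```python
from collections import Counter, deque


class UsageMonitor:
    """Tracks recent requests and reports threshold breaches."""

    def __init__(self, window_seconds=60, max_per_ip=100, max_error_rate=0.2):
        self.window_seconds = window_seconds
        self.max_per_ip = max_per_ip
        self.max_error_rate = max_error_rate
        self.events = deque()  # (timestamp, ip, is_error), oldest first

    def record(self, timestamp, ip, is_error=False):
        self.events.append((timestamp, ip, is_error))

    def alerts(self, now):
        # Drop events that have aged out of the sliding window.
        while self.events and self.events[0][0] <= now - self.window_seconds:
            self.events.popleft()

        found = []
        per_ip = Counter(ip for _, ip, _ in self.events)
        for ip, count in per_ip.items():
            if count > self.max_per_ip:
                found.append(f"high request volume from {ip}: {count}")

        total = len(self.events)
        errors = sum(1 for _, _, is_error in self.events if is_error)
        if total and errors / total > self.max_error_rate:
            found.append(f"error rate {errors / total:.0%}")
        return found
```

Fixed thresholds like these are the simplest option; the machine-learning approaches mentioned above would replace the two comparisons with a learned model of normal traffic.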

Security Risks Associated with Data Exposure

Source: ctfassets.net

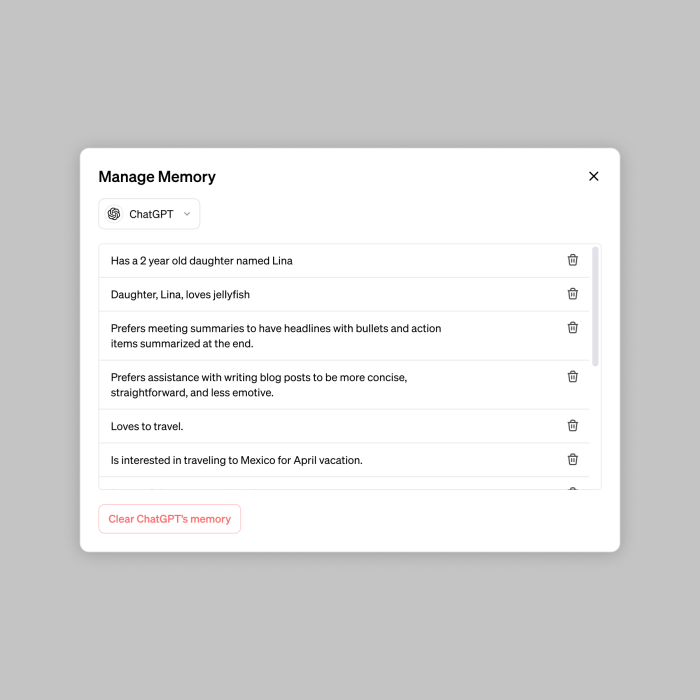

The seemingly innocuous act of a crawler accessing and processing data generated by a large language model (LLM) harbors significant security risks. Exposing this generated text, even unintentionally, can lead to severe data breaches and reputational damage, impacting both the LLM provider and its users. The potential for sensitive information leakage necessitates a robust security framework.

The nature of LLMs, which often process and generate text based on vast datasets, inherently increases the risk of data exposure. Crawlers, designed to index and extract information, can inadvertently access and replicate sensitive data if proper security measures aren’t in place. This exposure is particularly concerning when dealing with personally identifiable information (PII), financial data, intellectual property, or confidential business strategies.

Sensitive Information Leakage Examples

Several scenarios illustrate how sensitive information could be inadvertently leaked. Imagine a crawler accessing a publicly available API endpoint that returns LLM-generated summaries of customer support tickets. If these summaries contain PII like names, addresses, or account numbers, the crawler could easily collect and index this data, making it accessible to malicious actors. Similarly, a crawler scraping a website displaying LLM-generated research findings could inadvertently capture confidential business plans or intellectual property embedded within the text. In another scenario, a crawler accessing a publicly accessible database of LLM-generated medical diagnoses could expose patient health information. These examples highlight the importance of stringent data sanitization and access control mechanisms.

Data Sanitization and Security Protocols

Data sanitization is paramount to mitigating these risks. This involves implementing processes to remove or redact sensitive information before the generated text is exposed to external access. This might include techniques like data masking, where sensitive data is replaced with placeholder values, or de-identification, where identifying information is removed entirely. Beyond sanitization, robust security protocols are crucial. This includes implementing access control lists (ACLs) to restrict access to sensitive data, employing encryption to protect data in transit and at rest, and implementing regular security audits to identify and address vulnerabilities. Furthermore, employing robust rate limiting and intrusion detection systems can help prevent malicious crawlers from overwhelming the system or accessing data through unauthorized means.
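A minimal sketch of the data-masking idea follows, assuming that email addresses and US-style Social Security numbers are the sensitive fields. The `mask_pii` function and its two patterns are illustrative only; regular expressions catch well-formatted values but miss many real-world variants, so production systems generally combine them with dedicated PII-detection tooling.

```python
import re

# Deliberately simple patterns; they will not catch every variant.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text):
    """Replace matched values with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = SSN.sub("[SSN]", text)
    return text
```

Applied to LLM-generated text before it is published, a filter like this replaces each match with a placeholder while leaving the rest of the text unchanged.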

Security Measures Checklist

A comprehensive security strategy requires a multi-layered approach. The following checklist outlines key security measures to protect against data breaches:

- Implement robust authentication and authorization mechanisms to control access to LLM-generated data.

- Employ data loss prevention (DLP) tools to monitor and prevent sensitive data from leaving the system.

- Regularly update and patch software and infrastructure to address known vulnerabilities.

- Implement strong encryption protocols to protect data both in transit and at rest.

- Conduct regular security audits and penetration testing to identify and mitigate potential weaknesses.

- Establish clear data governance policies and procedures to ensure responsible data handling.

- Train employees on data security best practices and the importance of responsible data handling.

- Implement robust logging and monitoring systems to track access to LLM-generated data and detect suspicious activity.

- Use input validation and sanitization techniques to prevent malicious data from entering the system.

- Consider employing techniques like differential privacy to minimize the risk of re-identification of individuals from aggregated data.

Impact of Crawlers on System Performance and Stability

Imagine a stampede of virtual elephants – that’s what high-volume crawling can feel like for a large language model (LLM) system. The sheer number of requests can overwhelm resources, leading to sluggish performance and even complete system crashes. Understanding this impact is crucial for building robust and reliable LLMs.

The potential for performance degradation is significant. Each crawler request consumes system resources – CPU cycles, memory, network bandwidth, and database queries. A surge in requests, especially if poorly managed, can quickly exhaust these resources, leading to increased latency, slower response times, and ultimately, system instability. Think of it like a restaurant suddenly inundated with orders – the kitchen staff (system resources) can only handle so much before things start to fall apart. In the context of an LLM, this translates to users experiencing delayed or incomplete responses, or even complete service outages.

Optimizing API Responses to Reduce System Load

Efficient API response management is key to mitigating the performance impact of crawlers. Techniques such as caching frequently accessed data can significantly reduce the load on the backend system. Imagine a library – instead of constantly searching for the same book, you can keep popular titles readily available. Similarly, caching frequently requested information reduces the computational burden on the LLM. Another strategy involves implementing rate limiting – controlling the number of requests a single crawler can make within a specific time frame. This prevents any single crawler from monopolizing system resources. Furthermore, compressing API responses can reduce the amount of data transferred, freeing up network bandwidth. Finally, carefully designing API endpoints to minimize unnecessary processing steps can also contribute to a more efficient system.
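The caching technique described above can be illustrated with a small time-to-live cache. The `TTLCache` class is a hypothetical helper written for this example; it is single-process and unbounded in size, so a real service would more likely use a shared cache with eviction.

```python
import time


class TTLCache:
    """Caches computed values for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: skip the expensive call
        value = compute()
        self._entries[key] = (now + self.ttl, value)
        return value
```

Wrapping an expensive LLM call as `cache.get_or_compute(prompt, lambda: call_model(prompt))`, where `call_model` stands for whatever function performs the request, means repeated identical requests from a crawler are served without touching the backend until the entry expires.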

Resource Allocation and Capacity Planning

Effective resource allocation and capacity planning are vital for maintaining system stability under heavy crawler traffic. This involves accurately forecasting the expected load based on historical data and anticipated growth. It’s like planning a concert – you need to ensure you have enough seating, security personnel, and concessions to accommodate the expected crowd. Similarly, LLMs need sufficient hardware resources – CPUs, memory, storage, and network bandwidth – to handle anticipated traffic. Over-provisioning resources can prevent performance bottlenecks during peak loads, ensuring a smooth user experience even during periods of high crawler activity. Under-provisioning, however, can lead to performance degradation and system instability. Regular monitoring and performance testing are essential to fine-tune resource allocation and ensure the system can handle the anticipated load.

Strategies for Managing Crawler Traffic and Ensuring System Stability

Several strategies can be employed to manage crawler traffic and maintain system stability. These strategies range from simple techniques to more sophisticated approaches. The optimal approach depends on the specific characteristics of the LLM system and the anticipated crawler load.

| Strategy | Description | Pros | Cons |
|---|---|---|---|
| Rate Limiting | Restricting the number of requests per crawler within a given time period. | Simple to implement, effective in controlling resource consumption. | Can be circumvented by sophisticated crawlers, may impact legitimate users. |
| IP Blocking | Blocking requests from known malicious or abusive IP addresses. | Effective against simple attacks, easy to implement. | Can be circumvented by using proxies or rotating IPs, requires constant updating of the blacklist. |
| CAPTCHA Implementation | Requiring users (including crawlers) to solve CAPTCHAs to verify they are not bots. | Effective against less sophisticated crawlers. | Can be annoying for legitimate users, some CAPTCHAs can be automated. |
| API Key Authentication | Requiring API keys for access, allowing for granular control over access and usage. | Allows for detailed monitoring and control of access, enables rate limiting per key. | More complex to implement, requires careful management of API keys. |

Mitigation Strategies and Best Practices

Protecting your large language model (LLM) from the relentless crawl of data-hungry bots requires a multi-layered defense strategy. Think of it like fortifying a castle – you need strong walls, vigilant guards, and clever traps to keep unwanted visitors out. This section outlines key strategies for bolstering your LLM’s defenses against unauthorized crawling.

Implementing robust security measures is crucial for safeguarding your valuable data and maintaining the integrity of your system. Ignoring these precautions could lead to data breaches, performance degradation, and reputational damage. A proactive approach, incorporating several layers of protection, is the most effective way to mitigate these risks.

Access Control Mechanisms and Authentication Protocols

Implementing stringent access control mechanisms is the first line of defense. This involves carefully managing who can access your LLM’s data and what actions they’re permitted to perform. Robust authentication protocols, such as OAuth 2.0 or OpenID Connect, ensure that only authorized users or applications can interact with your system. These protocols use tokens and cryptographic signatures to verify the identity of the requester, preventing unauthorized access. For example, requiring API keys for all requests and regularly rotating these keys minimizes the impact of compromised keys. Additionally, granular permission levels allow you to restrict access to specific data sets or functionalities based on user roles.
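To make the API-key example concrete, here is a minimal sketch of issuing, rotating, and verifying keys. The `ApiKeyStore` class is hypothetical and keeps its data in memory; the design choices it illustrates are storing only a hash of each key and comparing digests in constant time.

```python
import hashlib
import hmac
import secrets


class ApiKeyStore:
    """Issues API keys and verifies them without storing the plaintext."""

    def __init__(self):
        self._hashes = {}  # client_id -> SHA-256 hex digest of the key

    def issue(self, client_id):
        """Create a key, or rotate an existing one.

        The plaintext key is returned once and never stored.
        """
        key = secrets.token_urlsafe(32)
        self._hashes[client_id] = hashlib.sha256(key.encode()).hexdigest()
        return key

    def verify(self, client_id, presented_key):
        expected = self._hashes.get(client_id)
        if expected is None:
            return False
        actual = hashlib.sha256(presented_key.encode()).hexdigest()
        # Constant-time comparison avoids leaking information via timing.
        return hmac.compare_digest(expected, actual)
```

Calling `issue` again for the same client replaces the stored hash, so the old key stops working immediately. This sketch covers only key checking; OAuth 2.0 and OpenID Connect flows are considerably more involved and are best handled by an established library.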

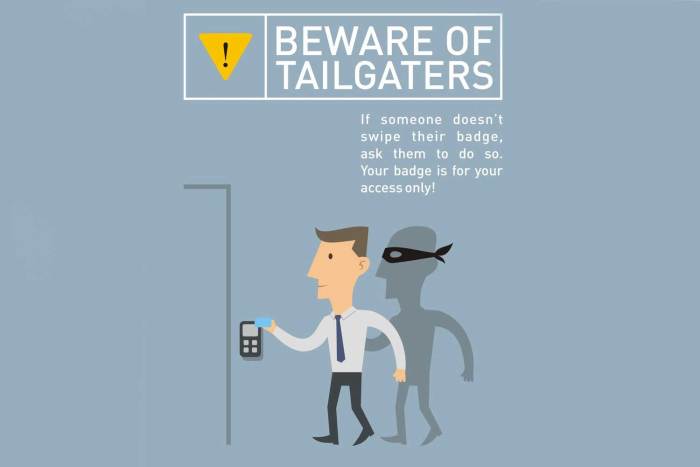

Bot Detection and Mitigation

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) remains a widely used method for distinguishing between legitimate users and bots. However, sophisticated bots are increasingly adept at bypassing CAPTCHAs. Therefore, a multi-faceted approach is necessary. This includes using more advanced bot detection techniques, such as analyzing user behavior patterns (e.g., request frequency, IP address, user agent), employing honeypots (decoy data points to attract and identify bots), and leveraging third-party bot mitigation services that use machine learning to identify and block malicious traffic. For instance, a sudden surge in requests from a single IP address could trigger an alert, indicating a potential bot attack.

Robust Error Handling and Exception Management

Unexpected errors and exceptions are inevitable. However, how your system handles these situations can significantly impact its security. Poor error handling can expose sensitive information, such as database structures or internal error messages, that could be exploited by malicious crawlers. Robust error handling involves gracefully handling exceptions, returning generic error messages to the user, and logging detailed error information for debugging purposes without revealing sensitive data. For example, instead of returning a detailed stack trace, a generic “Internal Server Error” message should be presented to the user while the detailed log is stored securely.
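The pattern described above, a generic message for the client and full details in the server log, can be sketched as follows. The `safe_call` wrapper and the response dictionary shape are assumptions for illustration rather than any particular framework's API.

```python
import logging
import uuid

logger = logging.getLogger("api")


def safe_call(handler, *args):
    """Run handler; on failure, log details and return a generic response."""
    try:
        return {"status": 200, "body": handler(*args)}
    except Exception:
        error_id = uuid.uuid4().hex
        # The stack trace is written to the server-side log only.
        logger.exception("unhandled error %s", error_id)
        return {
            "status": 500,
            "body": {"error": "Internal Server Error", "error_id": error_id},
        }
```

Returning an opaque `error_id` lets support staff find the matching log entry without exposing table names, file paths, or stack frames to the caller.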

API Design Best Practices

The design of your API significantly influences its vulnerability to crawling. Minimizing the amount of data exposed in each API response is crucial. Avoid returning unnecessary information, and implement rate limiting to control the number of requests from a single source within a specific timeframe. This helps prevent bots from overwhelming your system. Furthermore, designing your API to be stateless, meaning each request contains all the necessary information, reduces the risk of attacks that exploit session management vulnerabilities. For example, limiting the number of requests per minute from a single IP address to 100 prevents a bot from making thousands of requests in a short period.
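The per-IP limit in the example above could be enforced with a sliding-window counter along these lines. The `SlidingWindowLimiter` class is a hypothetical single-process sketch; a service running on several machines would need shared state, for instance in a central data store.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most `limit` requests per IP in any `window_seconds` span."""

    def __init__(self, limit=100, window_seconds=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self._hits = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip):
        now = self.clock()
        hits = self._hits[ip]
        # Forget requests that have left the window.
        while hits and hits[0] <= now - self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # caller would typically respond with HTTP 429
        hits.append(now)
        return True
```

With the defaults, the 101st request from one address within a minute is rejected while other addresses are unaffected.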

Analyzing the Ethical Implications

The ethical landscape surrounding AI-powered content generation and the crawlers that access it is complex and rapidly evolving. The potential for both good and harm is immense, demanding careful consideration of the implications before deploying such technologies. The unchecked use of crawlers can lead to serious ethical breaches, impacting individual privacy, intellectual property rights, and the overall integrity of information online.

The use of crawlers to harvest data from large language models raises several key ethical concerns. One significant worry revolves around the potential for misuse of the gathered information, while another centers on the inherent biases that can be amplified through such processes. Responsible data usage and ethical considerations must be at the forefront of any crawler design and deployment.

Potential Misuse of Retrieved Data and Its Consequences

The data retrieved by crawlers from LLMs isn’t just numbers and text; it often reflects patterns, biases, and even sensitive personal information. Malicious actors could exploit this data for various nefarious purposes. For instance, scraped data could be used to create deepfakes, spread misinformation on a massive scale, or even facilitate targeted phishing campaigns. The consequences could range from reputational damage to significant financial losses and even endangerment of individuals. Imagine a scenario where a crawler harvests data containing private medical information from a compromised LLM – the potential for identity theft and privacy violation is immense. This underscores the need for stringent security measures and ethical guidelines around data retrieval and usage.

Potential Biases in the Data Retrieved and their Impact

LLMs are trained on vast datasets, and these datasets often reflect existing societal biases. Crawlers accessing and processing this data risk amplifying and perpetuating these biases. For example, if an LLM’s training data overrepresents a particular demographic, a crawler harvesting that data will inevitably reflect this skewed representation. This biased data can then be used to create discriminatory algorithms or perpetuate harmful stereotypes in various applications, from loan applications to hiring processes. The impact of these biases can be profound and far-reaching, leading to unfair or unjust outcomes for certain groups. Addressing bias requires careful consideration of the source data and implementing strategies to mitigate the propagation of skewed information.

The Importance of Responsible Data Usage and Ethical Considerations in Crawler Design

Responsible data usage is paramount. Crawler developers must prioritize ethical considerations at every stage of the design process. This includes obtaining informed consent where appropriate, implementing robust data anonymization techniques, and ensuring transparency in data collection and usage practices. Building ethical safeguards into crawlers is not merely a matter of compliance but a crucial step towards fostering trust and preventing potential harm. This could involve incorporating mechanisms to detect and filter biased content, implementing data usage restrictions, and establishing clear accountability frameworks for the use of retrieved data. Ultimately, the responsible development and deployment of crawlers are vital for maintaining the integrity of information online and promoting fairness and equity.

Illustrative Examples of Exploits and Defenses

Let’s ditch the abstract and dive into some real-world (hypothetical, but realistic!) scenarios to illustrate how crawler vulnerabilities can be exploited and, more importantly, how to defend against them. Imagine the potential damage—data breaches, system crashes, and reputational nightmares—and you’ll see why robust security is paramount.

Imagine a scenario where a malicious actor crafts a sophisticated crawler designed to target a large language model (LLM) API. This isn’t your average web crawler; this one is weaponized.

Hypothetical Crawler Exploit Scenario

This malicious crawler leverages a rate-limiting vulnerability. The LLM API, while designed to handle a certain number of requests per second, has a flaw: it doesn’t effectively identify and block bursts of requests from a single IP address or a coordinated group of IPs. The attacker exploits this by sending a massive flood of requests, overwhelming the API’s capacity. The flood isn’t just random noise; it’s carefully structured to extract specific, sensitive information. The attacker might target data associated with specific users, confidential internal documents that the LLM has processed, or even the LLM’s internal training data. This denial-of-service (DoS) attack cripples the API, making it unavailable to legitimate users, while simultaneously exfiltrating valuable data. The impact extends beyond simple unavailability; the data breach could lead to significant financial losses, legal repercussions, and a severe erosion of public trust.

Attack Vector and Impact Analysis

The attack vector is a cleverly crafted crawler that exploits the rate-limiting vulnerability. The crawler uses techniques like rotating IP addresses, employing proxies, and staggering request timing to avoid detection. The impact is two-fold: a denial-of-service attack that renders the API unusable, and a data breach that exposes sensitive information. The attacker gains unauthorized access to proprietary data, potentially leading to significant financial losses, reputational damage, and legal consequences. For example, imagine if a medical LLM’s patient data were exposed – the ramifications would be substantial.

Countermeasures to Prevent the Exploit

To prevent such an exploit, several countermeasures could be implemented. These include robust rate limiting, IP address blocking, and advanced threat detection.

The importance of these countermeasures cannot be overstated. A multi-layered approach is key to effective defense.

- Implement robust rate limiting: Instead of a simple count of requests per second, use a more sophisticated algorithm that considers factors like request patterns, IP reputation, and the overall load on the system. This allows for legitimate bursts of activity while blocking malicious floods.

- Employ IP address blocking: Identify and block IP addresses exhibiting suspicious activity, such as a sudden surge in requests or requests that consistently exceed the defined rate limits. Consider using IP reputation databases to enhance this process.

- Utilize advanced threat detection: Implement intrusion detection and prevention systems (IDPS) to monitor API traffic for anomalous patterns indicative of malicious activity. Machine learning models can be trained to identify subtle patterns that human analysts might miss.

- Implement CAPTCHAs or other verification mechanisms: For sensitive operations or when unusual activity is detected, require users to complete CAPTCHAs or other verification steps to confirm they are legitimate users.

Effectiveness of Countermeasures

Let’s see how these countermeasures would work in practice against the hypothetical attack.

- Step 1: Rate Limiting Enhancement: The API’s rate limiting is upgraded to use a token bucket algorithm, which permits short bursts up to the bucket’s capacity while capping the sustained request rate. This reduces the effectiveness of the flood attack.

- Step 2: IP Address Blocking: The system detects a surge of requests from a specific IP address range. These IPs are temporarily blocked, effectively halting the attack from that source.

- Step 3: Threat Detection: The IDPS identifies the unusual pattern of requests—the rapid, repetitive nature of the requests—as malicious. It triggers an alert, and the system administrators can investigate and take further action.

- Step 4: CAPTCHA Implementation: For high-risk operations, CAPTCHAs are implemented, forcing the crawler to solve puzzles, effectively slowing down or halting the attack.
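Step 1 names the token bucket algorithm; a minimal sketch of one follows. The `TokenBucket` class and its parameter names are illustrative assumptions. Each client would get its own bucket, which refills at `rate` tokens per second up to `capacity`, and each request spends one token.

```python
import time


class TokenBucket:
    """Token bucket: bursts up to `capacity`, sustained `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        # Refill for the time elapsed, never exceeding capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Against the flood in the hypothetical attack, a bucket with a small capacity absorbs only the first few requests of each burst and then admits traffic no faster than the refill rate, regardless of how quickly the attacker sends.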

Final Summary

Source: futurecdn.net

The potential for exploitation through ChatGPT crawler vulnerabilities highlights the urgent need for robust security measures and ethical considerations. From implementing strict access controls and bot detection to designing APIs with security in mind, proactive measures are essential. Ignoring this vulnerability isn’t an option; it’s a race against increasingly sophisticated attacks, demanding a constant evolution of defensive strategies and a commitment to responsible data handling.