AI Cybersecurity Collaboration Playbook: In a world increasingly reliant on digital infrastructure, the need for robust cybersecurity is paramount. This playbook examines how industry experts, governments, and researchers can collaborate to apply AI against evolving cyber threats. We’ll explore how AI-powered tools enhance threat detection and prevention, while also addressing the ethical and legal implications of this powerful technology. Get ready to navigate the complex landscape of AI in cybersecurity.

From defining the scope of collaboration to implementing AI-driven security solutions and building a sustainable ecosystem, we’ll unpack the strategies, best practices, and challenges involved. We’ll analyze successful collaborations, examine case studies, and highlight key performance indicators for measuring success. This isn’t just a guide; it’s a roadmap to a safer digital future.

Defining the Scope of AI Cybersecurity Collaboration

The digital world is a battlefield, and the weapons are increasingly sophisticated. Cybersecurity is no longer a simple game of firewalls and antivirus software; it’s a complex dance between attackers and defenders, a constant arms race fueled by innovation. Artificial intelligence (AI) is playing a pivotal role on both sides, creating both unprecedented opportunities and daunting challenges. This playbook explores the crucial need for collaboration in navigating this evolving landscape.

AI is rapidly transforming cybersecurity. On the defensive side, AI-powered tools are used for threat detection, incident response, and vulnerability management. On the offensive side, AI is being weaponized to create more sophisticated and adaptive malware, making attacks harder to detect and respond to. This duality necessitates a collaborative approach to ensure effective defense.

Key Challenges in AI-Driven Cybersecurity Collaborations

Several hurdles impede effective collaboration in AI-driven cybersecurity. Data sharing remains a significant obstacle; organizations are often hesitant to share sensitive information due to privacy concerns and competitive pressures. Furthermore, the lack of standardized data formats and APIs hinders interoperability between different AI security tools and platforms. Finally, the rapid pace of technological advancement requires constant adaptation and upskilling, demanding significant investment and resources from all stakeholders. A coordinated effort is needed to overcome these challenges.

Benefits of Collaborative Approaches to AI Cybersecurity

Collaboration offers numerous advantages. Shared threat intelligence allows organizations to proactively identify and mitigate risks, reducing the overall impact of cyberattacks. Pooling resources, including expertise and technology, enhances the capacity to develop and deploy more robust AI-based security solutions. Collaboration fosters innovation, leading to the development of new and improved security tools and techniques. This collective approach can significantly improve the overall cybersecurity posture of the digital ecosystem.

Stakeholders Involved in AI Cybersecurity Collaboration

Effective AI cybersecurity necessitates a multi-faceted approach involving various stakeholders. Governments play a critical role in setting standards, regulations, and promoting information sharing initiatives. Industry players, including technology companies, cybersecurity firms, and businesses across various sectors, contribute by developing and deploying AI-powered security solutions and sharing threat intelligence. Academia plays a vital role in conducting research, educating the next generation of cybersecurity professionals, and developing innovative AI-based security technologies. Finally, individual users must be educated about cybersecurity best practices to minimize their risk.

Types of AI Cybersecurity Threats and Collaborative Solutions

| Threat Type | Description | Collaborative Solution | Stakeholder Roles |
|---|---|---|---|
| AI-powered Malware | Malware using AI to evade detection and adapt to defenses. | Shared threat intelligence platforms, collaborative malware analysis, development of AI-based anti-malware solutions. | Industry (security firms, tech companies), Government (regulation, standards), Academia (research, education) |
| Deepfakes and Social Engineering | AI-generated fake videos/audio used for phishing and disinformation campaigns. | Development of AI-based detection tools, public awareness campaigns, collaboration on media literacy initiatives. | Industry (tech companies, social media platforms), Government (regulation, public awareness), Academia (research, education) |
| AI-driven DDoS Attacks | Distributed denial-of-service attacks amplified by AI-controlled botnets. | Improved network security infrastructure, development of AI-based DDoS mitigation systems, information sharing on attack patterns. | Industry (telecom companies, cloud providers), Government (critical infrastructure protection), Academia (research, development) |
| Data Breaches Exploiting AI Vulnerabilities | Attacks targeting AI systems to steal sensitive data or manipulate AI algorithms. | Development of secure AI frameworks, robust data encryption methods, collaboration on vulnerability disclosure and patching. | Industry (tech companies, security firms), Government (data protection regulations), Academia (research, secure AI development) |

Developing a Collaborative Framework

Building a robust AI cybersecurity collaboration requires a well-defined framework, moving beyond simple information sharing to a coordinated, proactive defense. This framework should be adaptable, allowing for adjustments based on emerging threats and technological advancements. Think of it as a living document, constantly evolving to meet the ever-changing landscape of cyber threats.

A phased implementation plan is crucial for successful adoption. This ensures a smooth transition and minimizes disruption to existing security operations. Starting small and scaling gradually allows organizations to learn and adapt their approach along the way, maximizing the benefits of the collaboration.

Phased Implementation Plan for AI Cybersecurity Collaboration

The implementation should be broken down into manageable phases, starting with a pilot program involving a small group of participants. This allows for testing and refinement of processes before a full-scale rollout. Subsequent phases could focus on expanding participation, integrating new AI tools, and refining threat intelligence sharing protocols. A typical three-phase approach might include: Phase 1: Pilot program with key stakeholders; Phase 2: Expansion to include a wider range of organizations and AI tools; Phase 3: Full integration and continuous improvement. Each phase should have clearly defined goals, timelines, and metrics for success.

Communication Channels and Protocols

Effective communication is the bedrock of any successful collaboration. Establishing clear communication channels and protocols is essential for timely information sharing and coordinated responses to security incidents. This includes defining communication methods (e.g., secure messaging platforms, video conferencing, regular meetings), escalation procedures for critical events, and roles and responsibilities for communication management. For instance, a dedicated communication team could be responsible for disseminating threat intelligence and coordinating responses to incidents. Regularly scheduled meetings and drills can further enhance communication effectiveness.
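
Escalation procedures are easiest to follow under pressure when they are written down as data rather than prose. The sketch below encodes a hypothetical escalation matrix that maps incident severity to the parties notified and a first-response deadline. The severity levels, team names, and deadlines are illustrative assumptions, not recommendations from this playbook.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical escalation matrix: who is notified at each severity level and
# the maximum minutes allowed before a first response is due.
ESCALATION_MATRIX = {
    Severity.LOW: (["soc-queue"], 24 * 60),
    Severity.MEDIUM: (["soc-queue", "on-call-analyst"], 4 * 60),
    Severity.HIGH: (["on-call-analyst", "communication-team"], 60),
    # Only critical events are pushed out to partner organizations.
    Severity.CRITICAL: (["on-call-analyst", "communication-team", "partner-liaisons"], 15),
}


@dataclass
class EscalationDecision:
    severity: Severity
    notify: list[str]
    response_deadline_minutes: int


def escalate(severity: Severity) -> EscalationDecision:
    """Look up who must be notified, and how quickly, for a given severity."""
    notify, deadline = ESCALATION_MATRIX[severity]
    # Copy the list so callers cannot mutate the shared matrix.
    return EscalationDecision(severity, list(notify), deadline)


decision = escalate(Severity.CRITICAL)
print(decision.notify, decision.response_deadline_minutes)
```

Restricting partner notification to the critical level is one possible design choice for limiting how often sensitive incident details leave the organization; a collaboration could just as well agree on a lower threshold.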

Examples of Successful Collaborative Projects

Several successful collaborative projects highlight the benefits of shared intelligence and coordinated efforts. For example, the Cybersecurity and Infrastructure Security Agency (CISA) in the US works with private sector organizations, including through its Joint Cyber Defense Collaborative (JCDC), to share threat intelligence and coordinate responses to major cyberattacks such as widespread ransomware campaigns. Similarly, information-sharing platforms like MISP (originally the Malware Information Sharing Platform) allow organizations to contribute and access threat intelligence, fostering a collective defense against cyber threats. These efforts demonstrate the power of shared knowledge and coordinated action in enhancing cybersecurity resilience.

Mechanisms for Information Sharing and Threat Intelligence Exchange

Secure platforms and standardized formats are crucial for effective threat intelligence exchange. This might involve using secure channels for communication, adopting open standards (STIX for describing threat intelligence and TAXII for transporting it between organizations), and establishing clear procedures for data validation and verification. Consider employing a centralized threat intelligence platform to aggregate and analyze information from various sources. This allows for faster identification of emerging threats and more effective response strategies. Regular audits of the information sharing process help ensure the ongoing integrity and security of the system.
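
To make the STIX point concrete, here is a minimal sketch of a STIX 2.1 Indicator for a known-bad IPv4 address, wrapped in a bundle and built as plain JSON with the Python standard library. It assumes the bundle would later be published to partners through a TAXII 2.1 server, which is not shown. The indicator name, the IP address (taken from a documentation range), and both helper functions are hypothetical; in practice, the OASIS-maintained `stix2` Python package can build and validate these objects instead of hand-written dictionaries.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp() -> str:
    """Current UTC time in the RFC 3339 form STIX uses, with millisecond precision."""
    return datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def make_stix_indicator(name: str, ipv4: str) -> dict:
    """Build a minimal STIX 2.1 Indicator matching traffic to/from one IPv4 address."""
    now = stix_timestamp()
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "indicator_types": ["malicious-activity"],
        # STIX patterning language: matches an observed IPv4 address object.
        "pattern": f"[ipv4-addr:value = '{ipv4}']",
        "pattern_type": "stix",
        "valid_from": now,
    }


def make_bundle(objects: list[dict]) -> str:
    """Wrap STIX objects in a bundle, the usual unit of exchange."""
    bundle = {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": objects}
    return json.dumps(bundle, indent=2)


print(make_bundle([make_stix_indicator("Known C2 server", "198.51.100.7")]))
```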

Key Performance Indicators (KPIs) for Collaboration Effectiveness

Measuring the success of the collaboration requires carefully selected KPIs. These should reflect the improvement in overall cybersecurity posture and the efficiency of the collaborative effort. Key metrics could include: reduced mean time to detect (MTTD) and mean time to respond (MTTR) to security incidents, decreased number of successful cyberattacks, improved accuracy of threat intelligence, and increased efficiency of security operations. Regular monitoring and analysis of these KPIs provide valuable insights into the effectiveness of the collaboration and identify areas for improvement. For example, tracking the number of threats identified and mitigated through collaborative efforts can demonstrate the tangible benefits of the initiative.
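
The time-based KPIs can be computed directly from incident records. The sketch below uses one common convention, assumed here rather than prescribed by the playbook: MTTD runs from the start of malicious activity to the first alert, and MTTR runs from detection to confirmed resolution. The `Incident` fields and the sample incidents are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started: datetime   # when malicious activity began (often estimated during forensics)
    detected: datetime  # when the first alert fired
    resolved: datetime  # when containment and remediation were confirmed


def mttd(incidents):
    """Mean time to detect; raises statistics.StatisticsError on an empty list."""
    return timedelta(seconds=mean((i.detected - i.started).total_seconds() for i in incidents))


def mttr(incidents):
    """Mean time to respond, measured here from detection to resolution."""
    return timedelta(seconds=mean((i.resolved - i.detected).total_seconds() for i in incidents))


incidents = [
    Incident(datetime(2024, 3, 1, 8, 0), datetime(2024, 3, 1, 10, 0), datetime(2024, 3, 1, 14, 0)),
    Incident(datetime(2024, 3, 2, 9, 0), datetime(2024, 3, 2, 9, 30), datetime(2024, 3, 2, 11, 30)),
]
print(mttd(incidents), mttr(incidents))  # 1:15:00 3:00:00
```

Computing the same figures for periods before and after joining a collaboration gives a simple comparison. Start times are usually reconstructed after the fact, so MTTD in particular carries more uncertainty than MTTR.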

Implementing AI-Powered Security Tools and Techniques

AI is no longer a futuristic fantasy in cybersecurity; it’s a crucial tool for navigating the increasingly complex threat landscape. This section dives into the practical application of AI-powered security tools and techniques, highlighting their roles in threat detection, prevention, and incident response, and outlining the process of integrating these solutions into existing infrastructures.

AI’s role in bolstering cybersecurity defenses is multifaceted. It offers the potential to automate tasks, analyze vast datasets far exceeding human capabilities, and identify subtle patterns indicative of malicious activity that might otherwise go unnoticed. This translates to faster response times, reduced operational costs, and improved overall security posture.

AI in Threat Detection and Prevention

AI algorithms, particularly machine learning models, excel at identifying anomalies in network traffic, user behavior, and system logs. Supervised models are trained on large labeled datasets of known malicious and benign activity, while anomaly-detection models learn a baseline of normal behavior and flag deviations from it; both help distinguish normal from suspicious events with increasing accuracy. For instance, an AI-powered intrusion detection system (IDS) can analyze network packets to detect unusual patterns indicative of a denial-of-service attack or unauthorized access attempts. Similarly, AI-powered malware analysis tools can automatically scan files for malicious code and classify them based on their behavior and characteristics, far surpassing the speed and capacity of manual analysis. This proactive approach significantly enhances the ability to prevent attacks before they cause damage.
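
As a deliberately simple stand-in for the learned models described above, the sketch below learns a baseline from a known-normal period (for example, requests per minute to a service) and flags observations that deviate from it by more than a z-score threshold. This is a statistical baseline, not a machine learning IDS; the 3-standard-deviation threshold and the sample figures are illustrative assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(baseline, observed, threshold=3.0):
    """Return indices of `observed` values more than `threshold` standard deviations
    from the mean of a known-normal `baseline` (needs at least two baseline points)."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is anomalous.
        return [i for i, x in enumerate(observed) if x != mu]
    return [i for i, x in enumerate(observed) if abs(x - mu) / sigma > threshold]


# Requests per minute during a period believed to be attack-free (illustrative numbers).
baseline = [100, 98, 103, 97, 101, 99, 102, 100]
observed = [101, 99, 950, 100]
print(zscore_anomalies(baseline, observed))  # [2]
```

Real detectors replace the single mean and standard deviation with models over many features (ports, byte counts, login times), but the principle of learning "normal" first and scoring deviations is the same.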

Comparison of AI-Powered Security Tools

Several types of AI-powered security tools exist, each with its strengths and weaknesses. Intrusion detection systems (IDS) focus on network traffic analysis, identifying intrusions based on patterns and anomalies. Security information and event management (SIEM) systems aggregate security logs from various sources, utilizing AI to correlate events and detect threats across the entire infrastructure. Malware analysis tools leverage AI to automatically classify and analyze malicious files, identifying their behavior and potential impact. Endpoint detection and response (EDR) solutions combine AI-driven threat detection with automated response capabilities, allowing for faster containment of infections. The choice of tool depends on specific security needs and existing infrastructure. A comprehensive security strategy often involves a combination of these tools working in concert.
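
Much of the value a SIEM adds comes from correlating events across sources. The hypothetical rule below flags a successful login preceded by at least five failures from the same IP within ten minutes, counting failures from every log feed. In the usage data, neither the VPN nor the domain controller sees enough failures on its own; only the combined view crosses the threshold. The event schema, feed names, and thresholds are assumptions for illustration, and a SIEM with AI-based correlation would learn such patterns rather than rely on one hand-written rule.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class LogEvent:
    timestamp: datetime
    source: str   # log feed that produced the event, e.g. "vpn" or "domain-controller"
    src_ip: str
    action: str   # "login_failure" or "login_success"


def correlate_bruteforce(events, max_failures=5, window=timedelta(minutes=10)):
    """Flag successes preceded by >= max_failures failures from the same IP within `window`.

    Failures are counted across all log feeds, which is the point of correlation.
    """
    recent_failures = defaultdict(deque)
    alerts = []
    for ev in sorted(events, key=lambda e: e.timestamp):
        recent = recent_failures[ev.src_ip]
        # Slide the window: forget failures older than `window`.
        while recent and ev.timestamp - recent[0] > window:
            recent.popleft()
        if ev.action == "login_failure":
            recent.append(ev.timestamp)
        elif ev.action == "login_success" and len(recent) >= max_failures:
            alerts.append((ev.src_ip, ev.timestamp, len(recent)))
            recent.clear()
    return alerts


# Usage: three failures on each feed, then a success. Neither feed alone reaches five.
base = datetime(2024, 1, 1, 12, 0)
events = (
    [LogEvent(base + timedelta(minutes=m), "vpn", "203.0.113.9", "login_failure") for m in range(3)]
    + [LogEvent(base + timedelta(minutes=m), "domain-controller", "203.0.113.9", "login_failure") for m in range(3, 6)]
    + [LogEvent(base + timedelta(minutes=6), "vpn", "203.0.113.9", "login_success")]
)
print(correlate_bruteforce(events))
```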

AI Enhancement of Incident Response Capabilities

AI significantly accelerates and improves incident response. By automating the analysis of security alerts and identifying the root cause of incidents, AI reduces the time it takes to contain and remediate threats. AI-powered systems can automatically isolate infected systems, block malicious traffic, and initiate remediation actions, minimizing the impact of attacks. Furthermore, AI can assist in post-incident analysis by identifying the attack vectors, compromised systems, and the extent of the damage. This information is crucial for improving future security measures and preventing similar incidents from occurring.

Implementing AI-Driven Security Solutions

Integrating AI-driven security solutions requires a phased approach. The first step involves assessing the existing security infrastructure and identifying areas where AI can provide the most significant benefit. This assessment should consider the organization’s specific security needs, risk profile, and available resources. Next, select appropriate AI-powered security tools that align with these needs. The integration process itself involves configuring the tools, integrating them with existing systems, and training the AI models on relevant data. Continuous monitoring and refinement are crucial to ensure the effectiveness of the AI-driven security solutions. This requires skilled personnel capable of managing and interpreting the insights generated by the AI systems.

Workflow of an AI-Powered Security System

A typical workflow might look like this:

1. The system continuously monitors network traffic, system logs, and user behavior.

2. AI algorithms identify anomalies, and suspicious activities trigger alerts.

3. The AI system analyzes the alerts, correlating them with other events to determine their severity and potential impact.

4. Based on this analysis, automated responses are initiated (e.g., blocking malicious traffic, isolating infected systems).

5. Security analysts review the alerts and AI-generated insights, providing human oversight and validation.

6. Reports are generated, documenting the incidents and providing insights for improving future security measures.

This process is iterative, with the AI system constantly learning and adapting to new threats.
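
The workflow above can be sketched as a small pipeline. This is a hypothetical illustration, not a reference design: the anomaly score is assumed to come from an upstream detection model, the 0.05-per-corroborating-event boost and the 0.9 auto-containment threshold are arbitrary, and adding a host to `isolated_hosts` stands in for a real containment call. Every alert, whether or not it triggered an automated action, lands in the analyst review queue, reflecting the human-oversight step.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    host: str
    description: str
    anomaly_score: float       # 0.0 to 1.0, assumed to come from an upstream detection model
    corroborating_events: int  # related events found during correlation


@dataclass
class SecurityPipeline:
    auto_contain_threshold: float = 0.9
    isolated_hosts: set = field(default_factory=set)
    review_queue: list = field(default_factory=list)
    report: list = field(default_factory=list)

    def severity(self, alert: Alert) -> float:
        # Illustrative weighting: corroboration from other sources raises confidence.
        return min(1.0, alert.anomaly_score + 0.05 * alert.corroborating_events)

    def handle(self, alert: Alert) -> None:
        score = self.severity(alert)
        action = "none"
        if score >= self.auto_contain_threshold:
            # Stand-in for a real containment call (e.g. via an EDR product).
            self.isolated_hosts.add(alert.host)
            action = "isolated"
        # Every alert, automated or not, goes to an analyst for human validation.
        self.review_queue.append((alert, score, action))
        self.report.append(f"{alert.host}: {alert.description} score={score:.2f} action={action}")


pipeline = SecurityPipeline()
pipeline.handle(Alert("ws-042", "beaconing to a rarely seen domain", 0.80, 3))
pipeline.handle(Alert("ws-007", "login at an unusual hour", 0.40, 0))
print("\n".join(pipeline.report))
```

Gating automation on a high threshold while still queuing everything for review keeps fast containment for clear-cut cases without removing analysts from the loop.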

Addressing Ethical and Legal Considerations

The integration of AI into cybersecurity presents a thrilling frontier, but one fraught with ethical and legal complexities. The very power of AI to detect and respond to threats also raises concerns about privacy, bias, and accountability. Navigating this landscape requires a proactive approach, establishing clear guidelines and frameworks to ensure responsible development and deployment.

The use of AI in cybersecurity necessitates a careful balancing act between enhanced security and the potential for misuse. The sheer volume of data processed by AI systems, coupled with their autonomous decision-making capabilities, creates unique challenges in maintaining ethical standards and adhering to existing legal frameworks. Failing to address these concerns risks eroding public trust and undermining the very benefits AI offers.

Potential Ethical Dilemmas in AI Cybersecurity

AI systems, particularly those employing machine learning, can inherit and amplify biases present in their training data. This can lead to discriminatory outcomes, such as unfairly targeting certain groups or individuals. For instance, an AI system trained on data primarily reflecting the activities of a specific demographic might misidentify legitimate actions by individuals from other groups as malicious, leading to false positives and unnecessary interventions. Furthermore, the opaque nature of some AI algorithms – often referred to as the “black box” problem – can make it difficult to understand how decisions are made, hindering accountability and transparency. The potential for autonomous weapons systems, while not directly related to cybersecurity in the traditional sense, further highlights the ethical considerations surrounding AI’s decision-making power.
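
One way to surface the bias problem described above is to compare false positive rates across groups: if legitimate activity from one group is flagged far more often than from another, the model warrants review. The sketch below assumes labeled audit data as `(group, predicted_malicious, actually_malicious)` tuples; the group labels and the 5-percentage-point tolerance are hypothetical, and equal false positive rates are only one of several possible fairness criteria.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group FPR: benign events wrongly flagged / all benign events.

    `records` is an iterable of (group, predicted_malicious, actually_malicious).
    Truly malicious events are skipped because they cannot be false positives.
    """
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            benign[group] += 1
            if predicted:
                flagged[group] += 1
    return {group: flagged[group] / benign[group] for group in benign}


def has_fpr_disparity(rates, tolerance=0.05):
    """True if the gap between the highest and lowest group FPR exceeds `tolerance`."""
    return max(rates.values()) - min(rates.values()) > tolerance


records = (
    [("group-a", True, False)] * 2 + [("group-a", False, False)] * 98
    + [("group-b", True, False)] * 10 + [("group-b", False, False)] * 40
)
rates = false_positive_rates(records)
print(rates, has_fpr_disparity(rates))  # {'group-a': 0.02, 'group-b': 0.2} True
```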

Legal Implications of AI in Cybersecurity

The use of AI in cybersecurity raises significant legal questions, primarily concerning data privacy and liability. The processing of vast amounts of personal data for security purposes must comply with regulations like GDPR and CCPA. Determining liability in cases of AI-related security breaches is also a complex issue. Is the developer, the user, or the AI system itself responsible when a security incident occurs? These legal ambiguities require clarification through legislation and judicial precedent. Furthermore, international cooperation is essential to address the cross-border nature of cyber threats and the potential for conflicts of law. Consider a scenario where an AI system in one country detects and responds to a threat originating from another; establishing clear legal jurisdiction and accountability becomes crucial.

Transparency and Accountability in AI-Driven Security Systems

Transparency and accountability are paramount for building trust in AI-driven security systems. Users and stakeholders need to understand how these systems work, what data they use, and how decisions are made. This requires explainable AI (XAI) techniques that provide insights into the decision-making process. Accountability mechanisms, including audit trails and independent oversight, are essential to ensure that AI systems are used responsibly and do not violate ethical or legal standards. For example, regular audits of AI algorithms can help detect and correct biases, ensuring fairness and preventing discriminatory outcomes. Similarly, clear lines of responsibility must be established, clarifying who is accountable for the actions of an AI system and how to address any resulting harm.
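
Audit trails only support accountability if after-the-fact edits can be detected. A minimal sketch, assuming a single writer and SHA-256 hash chaining (a common technique, not one this playbook prescribes): each entry commits to the hash of the previous entry, so altering a past record breaks verification. The actor names and actions are hypothetical.

```python
import hashlib
import json


class AuditTrail:
    """Append-only, tamper-evident log: each entry stores the SHA-256 hash of its
    own contents plus the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys makes the serialization, and therefore the hash, deterministic.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, actor: str, action: str, detail: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"actor": actor, "action": action, "detail": detail, "prev": prev}
        entry_hash = self._digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry, or one deleted
        from the middle of the chain, makes verification fail."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "detail", "prev")}
            if entry["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("detection-model-v3", "isolate_host", {"host": "ws-042"})
trail.record("analyst:jdoe", "approve_isolation", {"host": "ws-042"})
print(trail.verify())                          # True
trail.entries[0]["detail"]["host"] = "ws-999"  # simulate tampering
print(trail.verify())                          # False
```

This is tamper-evident rather than tamper-proof: anyone able to rewrite the entire chain could recompute every hash, so a production system would also timestamp entries and periodically anchor the latest hash somewhere the log writer cannot modify.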

Establishing Ethical Guidelines and Regulatory Frameworks

Developing robust ethical guidelines and regulatory frameworks for AI in cybersecurity is crucial. These frameworks should address issues such as data privacy, bias mitigation, transparency, accountability, and the potential for misuse. Collaboration between governments, industry stakeholders, and researchers is essential to create effective and adaptable guidelines. International cooperation is also vital to ensure consistency and prevent regulatory arbitrage. These guidelines could draw inspiration from existing ethical frameworks in other fields, such as medical ethics or environmental regulations, adapting them to the unique challenges posed by AI in cybersecurity. Furthermore, regular review and updates of these frameworks are necessary to keep pace with the rapid advancements in AI technology.

Policy Document: Best Practices for Responsible AI Cybersecurity Collaboration

A policy document outlining best practices for responsible AI cybersecurity collaboration should include:

1. Data Privacy and Security: Adherence to relevant data protection regulations (GDPR, CCPA, etc.), implementation of robust data security measures, and minimizing data collection to only what is necessary.

2. Bias Mitigation: Strategies for identifying and mitigating bias in training data and algorithms, ensuring fairness and preventing discriminatory outcomes.

3. Transparency and Explainability: Use of explainable AI (XAI) techniques to provide insights into decision-making processes, allowing for auditing and accountability.

4. Accountability and Oversight: Establishment of clear lines of responsibility, independent audits, and mechanisms for addressing grievances.

5. Human Oversight: Maintaining human control over critical decisions, preventing unintended consequences, and ensuring ethical considerations are paramount.

6. Security and Safety: Robust security measures to protect AI systems from attacks and ensure the reliability and safety of their operation.

7. Collaboration and Information Sharing: Facilitating secure and ethical collaboration among stakeholders to share threat intelligence and best practices.
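
Items 1 and 7 meet in practice when an event is prepared for sharing with partners. The sketch below applies data minimization with an allow-list of fields and pseudonymizes internal asset names with a keyed hash (HMAC-SHA256), so partners can tell that two reports concern the same asset without learning its name. The field names, the sample event, and the key are hypothetical. Data that can still be linked to an individual generally remains personal data under GDPR even when pseudonymized, so this reduces rather than removes compliance obligations.

```python
import hashlib
import hmac

# Hypothetical allow-list: fields a partner needs to act on the report.
SHAREABLE_FIELDS = {"timestamp", "src_ip", "signature"}
# Internal identifiers that partners may correlate on but should not see in clear.
PSEUDONYMIZED_FIELDS = {"internal_host"}


def prepare_for_sharing(event: dict, key: bytes) -> dict:
    """Drop non-allow-listed fields and replace internal identifiers with keyed hashes."""
    shared = {k: v for k, v in event.items() if k in SHAREABLE_FIELDS}
    for name in PSEUDONYMIZED_FIELDS:
        if name in event:
            digest = hmac.new(key, event[name].encode("utf-8"), hashlib.sha256).hexdigest()
            # Truncated for readability; the same input and key always give the same token.
            shared[name] = digest[:16]
    return shared


event = {
    "timestamp": "2024-01-01T12:06:00Z",
    "src_ip": "203.0.113.9",
    "signature": "hypothetical-bruteforce-rule",
    "internal_host": "payroll-db-01",
    "username": "jdoe",  # not on the allow-list, so it is never shared
}
print(prepare_for_sharing(event, key=b"example-key-rotate-regularly"))
```

A keyed hash is used instead of a plain hash because internal host names are guessable; without the key, a partner cannot simply hash candidate names to reverse the tokens.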

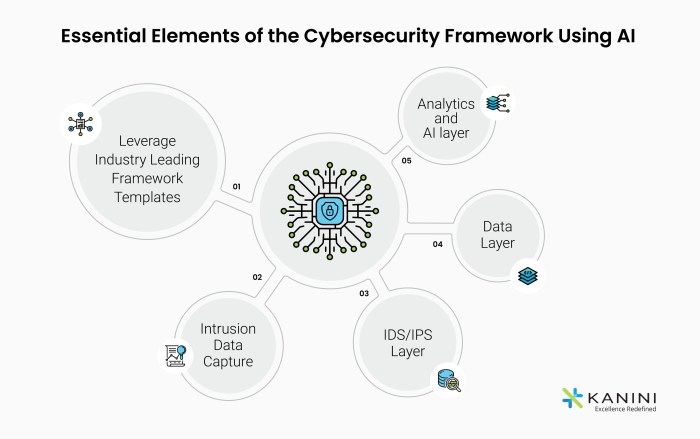

Building a Sustainable Collaborative Ecosystem

Building a lasting AI cybersecurity collaboration requires more than just a one-time agreement; it needs a robust ecosystem that fosters continuous growth and adaptation. This involves strategically nurturing relationships, establishing clear communication channels, and consistently investing in the collaborative effort. Think of it as building a strong, resilient network – one that can weather the inevitable storms of emerging threats and technological advancements.

Strategies for fostering long-term collaboration necessitate a multifaceted approach. It’s not just about signing contracts; it’s about cultivating trust and mutual benefit among all participants. This includes government agencies, private sector companies, academic institutions, and even individual cybersecurity experts. A strong foundation built on shared goals and open communication is key to longevity.

Knowledge Sharing and Capacity Building

Effective knowledge sharing is the lifeblood of a successful collaborative ecosystem. This involves establishing platforms for information exchange, such as secure online forums, regular workshops, and joint research projects. Capacity building is equally crucial, providing training and development opportunities to enhance the skills and expertise of all stakeholders. This could include sponsoring cybersecurity certifications, organizing mentorship programs, or creating shared training materials on the latest threats and technologies. Imagine a scenario where a small company benefits from the expertise of a large corporation, gaining access to advanced threat intelligence and security best practices. This mutual learning strengthens the entire ecosystem.

Continuous Improvement and Adaptation Mechanisms

The cybersecurity landscape is constantly evolving, so the collaborative playbook must be dynamic and adaptable. Regular reviews and updates are essential, incorporating feedback from all stakeholders and adapting to new threats and technologies. This could involve establishing a feedback loop mechanism, where participants regularly assess the playbook’s effectiveness and suggest improvements. For example, a yearly review process could analyze the success rate of collaborative efforts, identify areas needing improvement, and update the playbook accordingly. This ensures the playbook remains relevant and effective in addressing emerging cybersecurity challenges.

Resources and Support Mechanisms for Collaboration

Maintaining a thriving collaborative environment requires dedicated resources and support. This includes allocating sufficient funding for operational costs, providing technical infrastructure, and establishing clear communication channels. For instance, a shared secure platform for communication and data sharing could be established, along with dedicated personnel to manage the collaboration and facilitate communication among participants. Regular meetings and communication protocols are also crucial to ensure everyone stays informed and aligned on shared goals.

Potential Funding Sources and Partnerships

Securing sustainable funding is crucial for long-term collaboration. Potential sources include government grants focused on cybersecurity initiatives, industry partnerships with technology vendors, and philanthropic organizations dedicated to digital security. For example, a government grant could fund a collaborative project focused on developing AI-powered threat detection systems, while a partnership with a cybersecurity company could provide access to advanced technologies and expertise. Collaboration with universities could lead to research grants and access to cutting-edge research. Exploring diverse funding streams ensures the collaborative ecosystem can sustain itself and continue its vital work.

Case Studies and Best Practices

Successful AI cybersecurity collaborations aren’t just theoretical; they’re happening right now, shaping the future of digital security. Learning from both triumphs and failures is crucial for building robust and effective partnerships. This section dives into real-world examples, highlighting key success factors and offering practical advice for replicating positive outcomes. We’ll examine collaborations that have leveraged AI to improve threat detection, incident response, and overall security posture.

Analyzing successful and unsuccessful collaborations reveals crucial patterns. Factors like clear communication, shared goals, and a well-defined division of responsibilities consistently emerge as critical components of successful partnerships. Conversely, a lack of trust, conflicting objectives, or inadequate resource allocation often lead to setbacks. By understanding these dynamics, organizations can proactively mitigate risks and maximize the benefits of AI-powered cybersecurity collaborations.

Successful AI Cybersecurity Collaborations: Examples and Analysis

Several noteworthy collaborations demonstrate the power of AI in bolstering cybersecurity. These case studies highlight diverse approaches, showcasing the adaptability and scalability of AI-driven security solutions.

| Collaboration | Participating Organizations | AI Application | Key Success Factors |
|---|---|---|---|
| Financial Institution Consortium | Five major banks | Shared threat intelligence platform using machine learning to detect and respond to advanced persistent threats (APTs). | Strong leadership, clear data-sharing agreements, regular communication, commitment to joint resource allocation. |
| Government-Industry Partnership | National Cybersecurity Agency and several tech companies | AI-powered vulnerability detection and remediation platform for critical infrastructure. | Well-defined roles and responsibilities, government funding and support, access to sensitive data, proactive threat sharing. |
| Healthcare Data Sharing Initiative | Several hospitals and a cybersecurity firm | AI-driven anomaly detection system for identifying data breaches in medical records. | Prioritization of patient privacy, robust data anonymization techniques, rigorous security protocols, frequent audits. |

Lessons Learned from Unsuccessful Collaborations

Not all AI cybersecurity collaborations are successful. Failures often stem from preventable issues. Understanding these pitfalls is crucial for future endeavors.

One common issue is a lack of trust among participating organizations, hindering the free flow of information. Another frequent problem is the absence of a clear governance structure, leading to confusion and inefficiencies. Inadequate resource allocation, poor communication, and incompatible technical systems also contribute to failed collaborations. Finally, neglecting ethical and legal considerations can lead to serious consequences, undermining the entire initiative.

Recommendations for Replicating Successful Models

Replicating successful AI cybersecurity collaborations requires a strategic approach. Key steps include establishing clear goals and objectives, defining roles and responsibilities, and developing a robust communication plan. Building trust among partners is paramount, requiring transparency and open communication. A well-defined governance structure is essential for ensuring accountability and efficient decision-making. Furthermore, selecting appropriate AI-powered tools and techniques, addressing ethical and legal concerns, and establishing mechanisms for continuous improvement are critical for long-term success.

Investing in robust data sharing mechanisms and establishing clear protocols for data security and privacy are also crucial. Regular assessments of the collaboration’s effectiveness and adjustments based on performance metrics ensure ongoing success. Finally, fostering a culture of collaboration and mutual support among participating organizations is essential for building a sustainable and effective partnership.

Final Recap: AI Cybersecurity Collaboration Playbook

Ultimately, the AI Cybersecurity Collaboration Playbook underscores the necessity of a unified front against cyber threats. By fostering collaboration between various stakeholders, leveraging AI’s capabilities responsibly, and addressing ethical considerations head-on, we can create a more secure and resilient digital world. This playbook provides the framework, the tools, and the insights to make that happen. Let’s build a future where technology protects, not endangers, us.